Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

Should I "no-index" two exact pages on Google results?

-

Hello everyone,

I recently started a new WordPress website and created a static homepage.

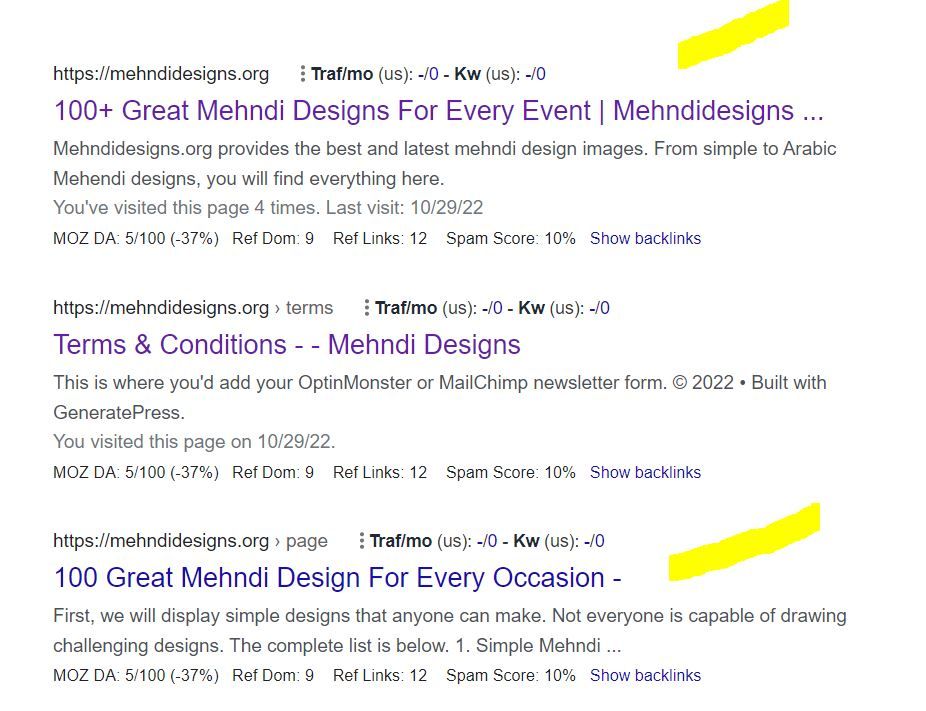

I noticed that in Google's search results there are two different URLs that land on the same content.

I've attached an image to explain what I saw.

Should I "no-index" the page URL?

In this picture, the first result is the homepage, which is the page I am trying to rank. The last result lands on the same content under a different URL.

So, should I no-index the last result shown in the image?

-

In most SEO plugins, you can edit the secondary page and set its canonical URL field to the homepage URL.

-

@amanda5964 You can use a canonical link tag (rel="canonical") to tell Google that the two URLs serve exactly the same page. Google will then index one of them; it treats the canonical as a hint when choosing which URL is best for the SERP.
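To check that the duplicate URL really carries the canonical you expect, a small script can read it back out of the page source. This is only a minimal sketch using Python's standard library: the `CanonicalFinder` class, the `find_canonical` helper, the sample HTML, and the example.com address are all made up for illustration and are not taken from the site in the question.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # Only <link> elements matter, and only the first canonical found.
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Hypothetical source of the duplicate page; example.com is a placeholder.
DUPLICATE_PAGE = """
<html><head>
  <title>Home</title>
  <link rel="canonical" href="https://example.com/" />
</head><body>...</body></html>
"""

print(find_canonical(DUPLICATE_PAGE))  # prints https://example.com/
```

If the function returns None for the secondary URL, the SEO plugin has probably not written the tag yet.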

-

Hi @amanda5964, could I ask whether there is a reason for having these identical pages? If the content is identical, you might want to consider simply combining the pages, i.e. deleting your sub page and redirecting its URL to the homepage.
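The redirect idea can be pictured as a lookup from the duplicate path to the page that should keep ranking, answered with a permanent (301) status. The sketch below is illustrative only: the `/home/` path, the `REDIRECTS` table, and the `respond` function are assumptions, and on a real WordPress site this would normally be set up through a redirect plugin or the server configuration rather than hand-written code.

```python
from urllib.parse import urlsplit

# Hypothetical duplicate paths mapped to the URL that should keep ranking.
REDIRECTS = {"/home/": "/"}


def respond(url):
    """Return (status, location) for a requested URL.

    A 301 sends both visitors and crawlers to the target permanently;
    any other path is served normally with a 200 and no location.
    """
    path = urlsplit(url).path
    # Treat "/home" and "/home/" as the same page.
    if not path.endswith("/"):
        path += "/"
    target = REDIRECTS.get(path)
    if target is not None:
        return 301, target
    return 200, None


print(respond("https://example.com/home"))  # prints (301, '/')
print(respond("https://example.com/"))      # prints (200, None)
```

Unlike a canonical tag, the redirect removes the second URL for visitors as well, so it only fits when nobody needs the sub page to exist on its own.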

-

I would not no-index. Typically it is more effective to use a canonical link from the secondary content to the main page you want the traffic directed to.
