Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

Dynamically-generated .PDF files, instead of normal pages, indexed by and ranking in Google

-

Hi,

I've come across a tough problem. I am working on an online store website that includes functionality for viewing product details in .PDF format (by the way, the website is built on the Joomla CMS). Now when I search for my site's name in Google, the SERP simply displays my .PDF files in the first couple of positions (shown in the normal .PDF result format: [PDF]...), and I cannot find the normal pages on SERP #1 unless I search for the full site domain in Google. I really don't want this! Would you please tell me how to figure out the problem and solve it? I can actually remove the corresponding component (Virtuemart) that is in charge of generating the .PDF files. Right now I am trying to redirect all the .PDF pages ranking in Google to a 404 page and remove the functionality. I also plan to regenerate a sitemap of my site and submit it to Google. Will that work for me? I would really appreciate it if you could help solve this problem. Thanks very much.

Sincerely

SEOmoz Pro Member

-

Recently discovered this:

Indicate the canonical version of a URL by responding with the Link rel="canonical" HTTP header. Adding rel="canonical" to the head section of a page is useful for HTML content, but it can't be used for PDFs and other file types indexed by Google Web Search. In these cases you can indicate a canonical URL by responding with the Link rel="canonical" HTTP header, like this (note that to use this option, you'll need to be able to configure your server):

Link: <http://www.example.com/downloads/white-paper.pdf>; rel="canonical"

Google currently supports these link header elements for Web Search only.

-http://support.google.com/webmasters/bin/answer.py?hl=en&answer=139394
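For what it's worth, here is a rough sketch of how that header could be attached to PDF responses so each PDF names its HTML page as the canonical version. It assumes you can hook into the response headers somewhere in your stack; the PDF_TO_HTML mapping, the paths in it, and both function names are made up for illustration and are not part of Joomla or Virtuemart.

```python
# Hypothetical mapping from PDF paths to the HTML pages they duplicate.
# The real paths depend on how the site generates its PDF URLs.
PDF_TO_HTML = {
    "/downloads/white-paper.pdf": "http://www.example.com/white-paper.html",
}


def canonical_link_header(canonical_url):
    """Build the (name, value) pair for a Link rel="canonical" HTTP header."""
    if not canonical_url.startswith(("http://", "https://")):
        raise ValueError("canonical URL must be absolute")
    return ("Link", '<{}>; rel="canonical"'.format(canonical_url))


def headers_for_pdf(path):
    """Return response headers for a PDF, adding the canonical hint if known."""
    headers = [("Content-Type", "application/pdf")]
    canonical = PDF_TO_HTML.get(path)
    if canonical is not None:
        headers.append(canonical_link_header(canonical))
    return headers
```

The same header can usually be set in the web server configuration instead of application code; the point is only that the PDF response itself carries the pointer to the HTML page.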

-

I would consider either excluding the PDFs from the index with your robots.txt in conjunction with resubmitting your sitemap (which you're all over), or placing a text link at the bottom of each PDF pointing back to the HTML version of that page (which, all things being equal, should cause the HTML version of the page to rank instead). I am not sure about serving 404 headers to Google instead of the PDFs that are currently in the index. Why not 301 to the HTML version of each PDF? Obviously that can't be a permanent solution, as you will eventually want to restore the functionality to users, right? But it will tell Googlebot that the content of each PDF is to be found from here on out at the URL containing the HTML version. This is a case where it would be handy to serve one thing to the bots and another to the human viewers, but I am afraid that doing so could get you into trouble.
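To make the 301 idea concrete, here is a minimal sketch of the lookup involved. It assumes you have (or can build) a list of which PDF URL corresponds to which HTML page; the PDF_REDIRECTS table, its example paths, and the redirect_for function are hypothetical and only show the shape of the logic.

```python
# Hypothetical table: PDF path -> path of the HTML version of the same product.
PDF_REDIRECTS = {
    "/products/widget.pdf": "/products/widget.html",
}


def redirect_for(path):
    """Return (status, headers) for a known PDF path, or None to serve normally."""
    target = PDF_REDIRECTS.get(path)
    if target is None:
        # Not a PDF we know about: let the normal request handling continue.
        return None
    # 301 signals a permanent move to the HTML version of the page.
    return 301, [("Location", target)]
```

Anything not in the table falls through untouched, so regular pages are unaffected by the redirect rule.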

I am interested in your case though—let us know what, if anything besides the 404s and sitemap resubmittal, you end up trying and what happens with it. I'm also curious to know what other mozzers suggest.

Related Questions

-

Should search pages be indexed?

Hey guys, I've always believed that search pages should be no-indexed but now I'm wondering if there is an argument to index them? Appreciate any thoughts!

Technical SEO | | RebekahVP0 -

Indexing Issue of Dynamic Pages

Hi All, I have a query for which i am struggling to find out the answer. I unable to retrieve URL using "site:" query on Google SERP. However, when i enter the direct URL or with "info:" query then a snippet appears. I am not able to understand why google is not showing URL with "site:" query. Whether the page is indexed or not? Or it's soon going to be deindexed. Secondly, I would like to mention that this is a dynamic URL. The index file which we are using to generate this URL is not available to Google Bot. For instance, There are two different URL's. http://www.abc.com/browse/ --- It's a parent page.

Technical SEO | | SameerBhatia

http://www.abc.com/browse/?q=123 --- This is the URL, generated at run time using browse index file. Google unable to crawl index file of browse page as it is unable to run independently until some value will get passed in the parameter and is not indexed by Google. Earlier the dynamic URL's were indexed and was showing up in Google for "site:" query but now it is not showing up. Can anyone help me what is happening here? Please advise. Thanks0 -

Best practices for types of pages not to index

Trying to better understand best practices for when and when not use a content="noindex". Are there certain types of pages that we shouldn't want Google to index? Contact form pages, privacy policy pages, internal search pages, archive pages (using wordpress). Any thoughts would be appreciated.

Technical SEO | | RichHamilton_qcs0 -

Blog Page Titles - Page 1, Page 2 etc.

Hi All, I have a couple of crawl errors coming up in MOZ that I am trying to fix. They are duplicate page title issues with my blog area. For example we have a URL of www.ourwebsite.com/blog/page/1 and as we have quite a few blog posts they get put onto another page, example www.ourwebsite.com/blog/page/2 both of these urls have the same heading, title, meta description etc. I was just wondering if this was an actual SEO problem or not and if there is a way to fix it. I am using Wordpress for reference but I can't see anywhere to access the settings of these pages. Thanks

Technical SEO | | O2C0 -

Wordpress versus html and google ranking

My current SEO has always recommended that I take my site to wordpress. I really don't want to move to wordpress. I don't like it... I just like writing code in raw html, css, and script. I feel like I have more control that way. Wordpress just seems like a platform for blogs (I have my blog in wordpress). My question is, do wordpress websites typically rank better? Is there benefit to moving to it?

Technical SEO | | CalicoKitty20000 -

Investigating a huge spike in indexed pages

I've noticed an enormous spike in pages indexed through WMT in the last week. Now I know WMT can be a bit (OK, a lot) off base in its reporting but this was pretty hard to explain. See, we're in the middle of a huge campaign against dupe content and we've put a number of measures in place to fight it. For example: Implemented a strong canonicalization effort NOINDEX'd content we know to be duplicate programatically Are currently fixing true duplicate content issues through rewriting titles, desc etc. So I was pretty surprised to see the blow-up. Any ideas as to what else might cause such a counter intuitive trend? Has anyone else see Google do something that suddenly gloms onto a bunch of phantom pages?

Technical SEO | | farbeseo0 -

Image Indexing Issue by Google

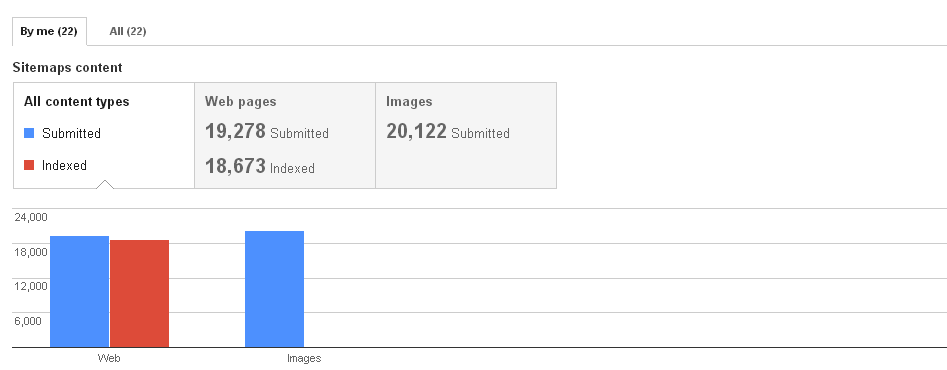

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

How Does Google's "index" find the location of pages in the "page directory" to return?

This is my understanding of how Google's search works, and I am unsure about one thing in specific: Google continuously crawls websites and stores each page it finds (let's call it "page directory") Google's "page directory" is a cache so it isn't the "live" version of the page Google has separate storage called "the index" which contains all the keywords searched. These keywords in "the index" point to the pages in the "page directory" that contain the same keywords. When someone searches a keyword, that keyword is accessed in the "index" and returns all relevant pages in the "page directory" These returned pages are given ranks based on the algorithm The one part I'm unsure of is how Google's "index" knows the location of relevant pages in the "page directory". The keyword entries in the "index" point to the "page directory" somehow. I'm thinking each page has a url in the "page directory", and the entries in the "index" contain these urls. Since Google's "page directory" is a cache, would the urls be the same as the live website (and would the keywords in the "index" point to these urls)? For example if webpage is found at wwww.website.com/page1, would the "page directory" store this page under that url in Google's cache? The reason I want to discuss this is to know the effects of changing a pages url by understanding how the search process works better.

Technical SEO | | reidsteven750