Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

How to create site map for large site (ecommerce type) that has 1000's if not 100,000 of pages.

-

I know this is kind of a newbie question but I am having an amazing amount of trouble creating a sitemap for our site Bestride.com. We just did a complete redesign (look and feel, functionality, the works) and now I am trying to create a site map. Most of the generators I have used "break" after reaching some number of pages. I am at a loss as to how to create the sitemap. Any help would be greatly appreciated!

Thanks

-

I agree with Chris. With such large websites it would be advisable having a sitemap index and then splitting the index into various individual indexes such as Pages, Products, Categories, images, media, tags etc.

-

The easiest thing i can think of is to write a script that works with your dispatcher to create a site map. The format I would use is add the page and all of the "product images" on the page to the map and move to the next. At the same time I would use an auto increment variable to keep track of how many lines you have written. When you get around 50k, write out the name of the next site map file that the program will create and have them chained together this way.

-

That's a great help Chris, thank you! And thanks to all for your help!

-

Typically, a sitemap is going to include every page on the site. As Francesca said, each sitemap can be up to 50K urls and if you need multiple sitemaps then you create a sitemap index that points to the rest of the sitemaps.

-

Thanks for the feedback!

I will look into screamingfrog for sure.

@Lesley - we are using a custom platform (in house) so we don't have that functionality. The issue is that we have a lot of inventory (millions) of cars. We have built (and are releasing new functionality today) to provide internal links so that Google can crawl all the inventory easily (users can too :). My question about sitemaps has boiled down to this: Do we need to build the sitemap to include every single page (all the inventory) or do we provide a "map" so that google can find the top pages and then crawl the inventory from there. Again the site is bestride.com. If anyone wants to take a look at the site, that would be fantastic!

Thanks

-

Are you using a custom platform or an off the shelf e-commerce package? Most off the shelf packages actually have a module that can create a site map and a lot have it where you can cron it too.

-

Of course, you can also use the moz's crawl test report at http://pro.a-moz.groupbuyseo.org/tools/crawl-test

-

Hi Kristin,

Each sitemap.xml can support maximum 50.000 URLs. So, If you have a site with more than 100K, It'd be better to create 2 or 3 o 4 etc sitemaps.xml in order to contain all URLs. Hope it is useful.

Kind regards!

Francesca

-

You can use screamingfrog to create your sitemap. You just need to license it for crawl more than 500 URI.

Browse Questions

Explore more categories

-

Moz Tools

Chat with the community about the Moz tools.

-

SEO Tactics

Discuss the SEO process with fellow marketers

-

Community

Discuss industry events, jobs, and news!

-

Digital Marketing

Chat about tactics outside of SEO

-

Research & Trends

Dive into research and trends in the search industry.

-

Support

Connect on product support and feature requests.

Related Questions

-

Does anyone know the linking of hashtags on Wix sites does it negatively or postively impact SEO. It is coming up as an error in site crawls 'Pages with 404 errors' Anyone got any experience please?

Does anyone know the linking of hashtags on Wix sites does it negatively or positively impact SEO. It is coming up as an error in site crawls 'Pages with 404 errors' Anyone got any experience please? For example at the bottom of this blog post https://www.poppyandperle.com/post/face-painting-a-global-language the hashtags are linked, but they don't go to a page, they go to search results of all other blogs using that hashtag. Seems a bit of a strange approach to me.

Technical SEO | | Mediaholix0 -

My Website's Home Page is Missing on Google SERP

Hi All, I have a WordPress website which has about 10-12 pages in total. When I search for the brand name on Google Search, the home page URL isn't appearing on the result pages while the rest of the pages are appearing. There're no issues with the canonicalization or meta titles/descriptions as such. What could possibly the reason behind this aberration? Looking forward to your advice! Cheers

Technical SEO | | ugorayan0 -

Best practices for types of pages not to index

Trying to better understand best practices for when and when not use a content="noindex". Are there certain types of pages that we shouldn't want Google to index? Contact form pages, privacy policy pages, internal search pages, archive pages (using wordpress). Any thoughts would be appreciated.

Technical SEO | | RichHamilton_qcs0 -

Spam URL'S in search results

We built a new website for a client. When I do 'site:clientswebsite.com' in Google it shows some of the real, recently submitted pages. But it also shows many pages of spam url results, like this 'clientswebsite.com/gockumamaso/22753.htm' - all of which then go to the sites 404 page. They have page titles and meta descriptions in Chinese or Japanese too. Some of the urls are of real pages, and link to the correct page, despite having the same Chinese page titles and descriptions in the SERPS. When I went to remove all the spammy urls in Search Console (it only allowed me to temporarily hide them), a whole load of new ones popped up in the SERPS after a day or two. The site files itself are all fine, with no errors in the server logs. All the usual stuff...robots.txt, sitemap etc seems ok and the proper pages have all been requested for indexing and are slowly appearing. The spammy ones continue though. What is going on and how can I fix it?

Technical SEO | | Digital-Murph0 -

Create Page Titles from H1 using Yoast?

I'm working on a site that has 280 blog posts that have either been migrated from an old CMS site or created on the Dev version of the new WordPress site. We've written 280 unique meta descriptions so they don't truncate but it there a quick way I can export the current H1s and then import them into Yoast so they are set as the Page Titles? I've written unique Page Titles and meta descriptions for all the Service and Products page and just want a way to speed up the blog posts as their H1s are really good and what I would use as Page Titles anyway. Any help, greatly appreciated!

Technical SEO | | Marketing_Today0 -

Should I noindex my blog's tag, category, and author pages

Hi there, Is it a good idea to no index tag, category, and author pages on blogs? The tag pages sometimes have duplicate content. And the category and author pages aren't really optimized for any search term. Just curious what others think. Thanks!

Technical SEO | | Rignite0 -

Does Title Tag location in a page's source code matter?

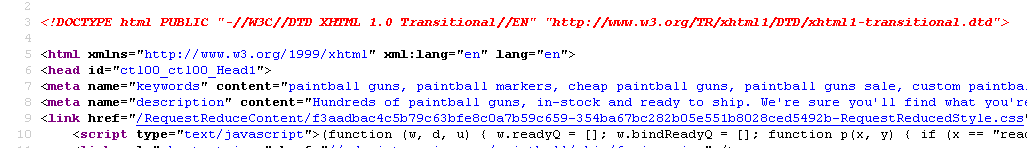

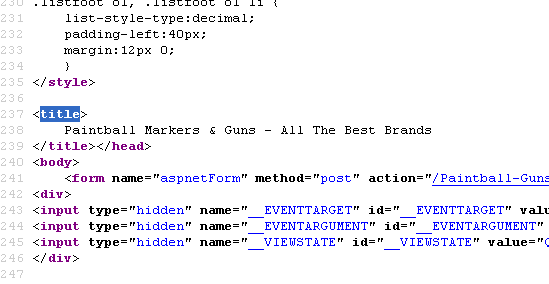

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

Technical SEO | | Istoresinc The title tag, however sits below a bunch of code on line 237

The title tag, however sits below a bunch of code on line 237

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0 -

Same Video on Multiple Pages and Sites... Duplicate Issues?

We're rolling out quite a bit of pro video and hosting on a 3-party platform/player (likely BrightCove) that also allows us to have the URL reside on our domain. Here is a scenario for a particular video asset: A. It's on a product page that the video is relevant for. B. We have an entry on our blog with the video C. We have a separate section of our site "Video Library" that provides a centralized view of all videos. It's there too. D. We eventually give the video to other sites (bloggers, industry educational sites etc) for outreach and link-building. A through C on our domain are all for user experience as every page is very relevant, but are there any duplicate video issues here? We would likely only have the transcript on the product page (though we're open to suggestions). Any related feedback would be appreciated. We want to make this scalable and done properly from the beginning (will be rolling out 1000+ videos in 2010)

Technical SEO | | SEOPA0