Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

How to create a sitemap for a large (e-commerce type) site with thousands, if not 100,000, pages?

-

I know this is kind of a newbie question, but I am having an amazing amount of trouble creating a sitemap for our site, Bestride.com. We just did a complete redesign (look and feel, functionality, the works), and now I am trying to create a sitemap. Most of the generators I have used "break" after reaching a certain number of pages. I am at a loss as to how to create the sitemap. Any help would be greatly appreciated!

Thanks

-

I agree with Chris. With such a large website, it would be advisable to have a sitemap index and then split the URLs into separate sitemaps by type, such as pages, products, categories, images, media, tags, etc.
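A sitemap index is itself a small XML file that lists the individual sitemaps. Below is a minimal Python sketch that builds one from a list of child sitemap URLs, following the sitemaps.org `sitemapindex` format. The `example.com` host and the `sitemap-<type>.xml` file names are hypothetical placeholders, not anything taken from Bestride.com.

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(child_sitemap_urls):
    """Return a sitemap index XML string with one <sitemap> entry per child file."""
    lines = ['<?xml version="1.0" encoding="UTF-8"?>',
             f'<sitemapindex xmlns="{SITEMAP_NS}">']
    for url in child_sitemap_urls:
        # Each child sitemap is referenced by its absolute URL in <loc>.
        lines.append(f"  <sitemap><loc>{escape(url)}</loc></sitemap>")
    lines.append("</sitemapindex>")
    return "\n".join(lines)


# Hypothetical layout: one child sitemap per content type.
children = [f"https://www.example.com/sitemap-{kind}.xml"
            for kind in ("pages", "products", "categories", "images")]
print(build_sitemap_index(children))
```

Splitting by type is an organisational choice: search engines accept any grouping, but per-type files make it easier to see which section has indexing problems.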

-

The easiest approach I can think of is to write a script that works with your dispatcher to create the sitemap. The format I would use is to add the page and all of the "product images" on it to the map, then move on to the next page. At the same time, I would use an auto-increment variable to keep track of how many entries you have written. When you get to around 50K, start a new sitemap file, and record the name of each file the program creates in a sitemap index, so the files are tied together that way.
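The approach above (write entries, count them, start a new file near 50K) could look roughly like the following Python sketch. It assumes your platform can hand the script an iterable of `(page_url, [image_urls])` pairs; that input shape and the helper names `url_entry`, `wrap` and `split_into_sitemaps` are assumptions for illustration. Product images are added with Google's image sitemap extension (`xmlns:image`). Under the sitemaps protocol, the resulting files are tied together by listing each one in a sitemap index. A real generator should also watch the 50 MB uncompressed size limit, which image-heavy entries can approach before the 50,000-URL count is reached.

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the sitemaps.org protocol
URLSET_OPEN = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9" '
    'xmlns:image="http://www.google.com/schemas/sitemap-image/1.1">'
)


def url_entry(page_url, image_urls):
    """Build one <url> element: the page plus its product images."""
    parts = [f"<url><loc>{escape(page_url)}</loc>"]
    for img in image_urls:
        parts.append(f"<image:image><image:loc>{escape(img)}</image:loc></image:image>")
    parts.append("</url>")
    return "".join(parts)


def wrap(entries):
    """Wrap a batch of <url> elements in a complete sitemap document."""
    return ('<?xml version="1.0" encoding="UTF-8"?>\n'
            + URLSET_OPEN + "\n" + "\n".join(entries) + "\n</urlset>")


def split_into_sitemaps(pages, limit=MAX_URLS_PER_SITEMAP):
    """Yield sitemap XML strings, starting a new one every `limit` pages.

    `pages` is an iterable of (page_url, [image_urls]) pairs (assumed input shape).
    """
    entries = []
    for page_url, image_urls in pages:
        entries.append(url_entry(page_url, image_urls))
        if len(entries) == limit:  # the running counter: roll over at the limit
            yield wrap(entries)
            entries = []
    if entries:  # flush the final, partially filled file
        yield wrap(entries)
```

Usage could be as simple as looping over `enumerate(split_into_sitemaps(pages), start=1)` and writing each string to a hypothetical `sitemap-{n}.xml`, then passing those file URLs to an index builder. Because it is a generator, only one file's worth of entries is held in memory at a time.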

-

That's a great help, Chris, thank you! And thanks to everyone for your help!

-

Typically, a sitemap is going to include every page on the site. As Francesca said, each sitemap can contain up to 50K URLs, and if you need multiple sitemaps, you create a sitemap index that points to the rest of them.
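The sitemaps.org protocol caps each sitemap file at 50,000 URLs and 50 MB (52,428,800 bytes) uncompressed. A quick Python check of a generated file against both limits might look like this. The function name `check_sitemap` is hypothetical, and it only counts `<url>` entries, so it is meant for regular sitemaps rather than index files.

```python
import xml.etree.ElementTree as ET

MAX_URLS = 50_000
MAX_BYTES = 52_428_800  # 50 MB, measured on the uncompressed file
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def check_sitemap(xml_bytes):
    """Return a list of limit violations; an empty list means the file is within limits."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append(f"file is {len(xml_bytes)} bytes, over the {MAX_BYTES}-byte limit")
    root = ET.fromstring(xml_bytes)
    count = len(root.findall("sm:url", NS))  # direct <url> children of <urlset>
    if count > MAX_URLS:
        problems.append(f"{count} URLs, over the {MAX_URLS}-URL limit")
    return problems
```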

-

Thanks for the feedback!

I will look into Screaming Frog for sure.

@Lesley - we are using a custom (in-house) platform, so we don't have that functionality. The issue is that we have a lot of inventory (millions of cars). We have built internal links (and are releasing new functionality for them today) so that Google can crawl all the inventory easily (users can too :). My question about sitemaps has boiled down to this: do we need to build the sitemap to include every single page (all the inventory), or do we provide a "map" so that Google can find the top pages and then crawl the inventory from there? Again, the site is bestride.com. If anyone wants to take a look at the site, that would be fantastic!

Thanks

-

Are you using a custom platform or an off-the-shelf e-commerce package? Most off-the-shelf packages actually have a module that can create a sitemap, and many let you run it on a cron schedule too.

-

Of course, you can also use Moz's crawl test report at http://pro.a-moz.groupbuyseo.org/tools/crawl-test

-

Hi Kristin,

Each sitemap.xml file can contain a maximum of 50,000 URLs. So if your site has more than 50,000 URLs (for example, 100K or more), you'll need to create several sitemap files (2, 3, 4, etc.) so that all of the URLs are covered. Hope it is useful.
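The arithmetic is a ceiling division: a site with N URLs needs ceil(N / 50,000) sitemap files. Exactly 100,000 URLs fit in 2 files, but 100,001 need 3. A small Python sketch; the URL counts in the loop are illustrative, not taken from any site in this thread.

```python
import math

MAX_URLS_PER_SITEMAP = 50_000


def sitemaps_needed(total_urls):
    """Smallest number of sitemap files that can hold total_urls URLs."""
    return math.ceil(total_urls / MAX_URLS_PER_SITEMAP)


for n in (17_000, 100_000, 100_001, 2_000_000):
    print(f"{n:>9,} URLs -> {sitemaps_needed(n)} sitemap file(s)")
```

For inventory in the millions, the file count stays manageable: 2,000,000 URLs need 40 files, and a single sitemap index can list up to 50,000 sitemaps.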

Kind regards!

Francesca

-

You can use Screaming Frog to create your sitemap. You just need a license to crawl more than 500 URLs.

Related Questions

-

I want to serve my e-commerce site's XML sitemap via a CDN

Hello Experts. My e-commerce site is abcd.com, and its sitemap is abcd.com/sitemap.xml.

My subdomain, xyz.abcd.com, is a blank page that returns status 200 and is served from a CDN. The sitemap at abcd.com/sitemap.xml contains only one link: the subdomain sitemap xyz.abcd.com/sitemap.xml.

That sitemap, xyz.abcd.com/sitemap.xml, contains all the category and product links of abcd.com. So my questions are: Is the above configuration okay? In Search Console I will add a new property, xyz.abcd.com, and add the sitemap xyz.abcd.com/sitemap.xml. Will Google then be able to report errors for my website abcd.com? The purpose: I want to serve my XML sitemap from a CDN, which is why I created the subdomain xyz.abcd.com. Hope you understood my query. Thanks!

Technical SEO | micey123
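One thing worth checking in a setup like this: the sitemaps.org protocol expects the URLs in a sitemap to live on the same host as the sitemap file, unless the site proves ownership through cross-submission, for example a `Sitemap:` line in the main site's robots.txt that points at the sitemap hosted elsewhere. The Python sketch below flags off-host URLs and builds that robots.txt line. The `https://` scheme and the sample product URLs are assumptions, since the question only gives bare host names.

```python
from urllib.parse import urlsplit


def off_host_urls(sitemap_url, page_urls):
    """Return the page URLs whose host differs from the sitemap file's own host."""
    sitemap_host = urlsplit(sitemap_url).hostname
    return [u for u in page_urls if urlsplit(u).hostname != sitemap_host]


def robots_sitemap_line(sitemap_url):
    """A robots.txt directive pointing crawlers at a sitemap, wherever it is hosted."""
    return f"Sitemap: {sitemap_url}"


cdn_sitemap = "https://xyz.abcd.com/sitemap.xml"      # assumed scheme
pages = ["https://abcd.com/category/shoes",           # hypothetical category URL
         "https://abcd.com/product/123"]              # hypothetical product URL
print(off_host_urls(cdn_sitemap, pages))   # both live on abcd.com, not xyz.abcd.com
print(robots_sitemap_line(cdn_sitemap))    # candidate line for abcd.com's robots.txt
```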

Unique page for each product variant? (Not eCommerce)

Hi Mozzers, Just looking for a little advice before I launch into a huge workload. We have landing pages for vehicle manufacturers. We then have anchor links in that page for each vehicle model that manufacturer has, with further info on the model further down the page. So we're toying with the idea of launching a unique page for each of the models rather than having them all on the same landing page. This will take an age and a minute but if it is worth it, we want to do it. Do you guys see a benefit to having unique pages for each model? Do you think it would attract more natural links? Would this help or hinder the manufacturer landing page in general? Should the manufacturer landing page be noindex so as to avoid duplicate content issues? I can see a lot of work and risk, just looking for a few opinions. PM for more info. Thanks a lot people, Jamie

Technical SEO | SanjidaKazi

Does Title Tag location in a page's source code matter?

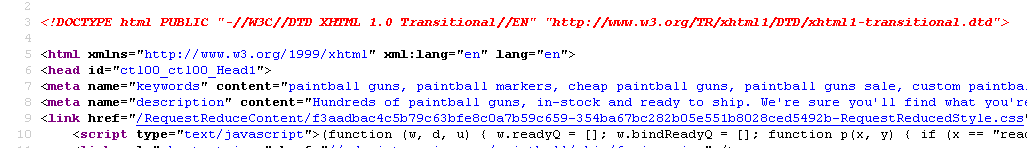

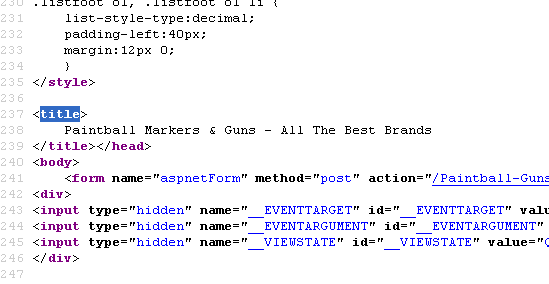

Currently the meta description for our page http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx is on line 8. The title tag, however, sits below a bunch of code on line 237.

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this, as our site was decimated in organic search last May and we are looking for any help with SEO.

Nick

Technical SEO | Istoresinc

How Does Google's "index" find the location of pages in the "page directory" to return?

This is my understanding of how Google's search works, and I am unsure about one thing in particular. Google continuously crawls websites and stores each page it finds (let's call this the "page directory"). Google's "page directory" is a cache, so it isn't the "live" version of the page. Google has separate storage called "the index", which contains all the keywords searched. These keywords in "the index" point to the pages in the "page directory" that contain the same keywords. When someone searches for a keyword, that keyword is looked up in the "index", which returns all relevant pages in the "page directory". These returned pages are then ranked by the algorithm.

The one part I'm unsure of is how Google's "index" knows the location of relevant pages in the "page directory". The keyword entries in the "index" must point to the "page directory" somehow. I'm thinking each page has a URL in the "page directory", and the entries in the "index" contain these URLs. Since Google's "page directory" is a cache, would the URLs be the same as on the live website (and would the keywords in the "index" point to these URLs)? For example, if a webpage is found at www.website.com/page1, would the "page directory" store the page under that URL in Google's cache? The reason I want to discuss this is to understand the effects of changing a page's URL by better understanding how the search process works.

Technical SEO | reidsteven75
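What the question describes is essentially an inverted index. Google's actual data structures are not public, so the following is only a textbook-style Python toy under that assumption: the index maps each term to document IDs, and a separate document store (the "page directory") maps each ID to its URL and text. `ToyIndex` and its methods are hypothetical names.

```python
import re
from collections import defaultdict


class ToyIndex:
    """Toy inverted index: term -> set of doc IDs, plus a doc store keyed by ID."""

    def __init__(self):
        self.docs = {}                    # "page directory": doc_id -> {"url", "text"}
        self.postings = defaultdict(set)  # "index": term -> {doc_id, ...}

    @staticmethod
    def terms(text):
        """Lowercase alphanumeric tokens."""
        return re.findall(r"[a-z0-9]+", text.lower())

    def add(self, doc_id, url, text):
        self.docs[doc_id] = {"url": url, "text": text}
        for term in self.terms(text):
            self.postings[term].add(doc_id)

    def search(self, query):
        """Return URLs of docs containing every query term (no ranking step)."""
        ids = None
        for term in self.terms(query):
            matches = self.postings.get(term, set())
            ids = matches if ids is None else ids & matches
        return sorted(self.docs[i]["url"] for i in (ids or set()))


index = ToyIndex()
index.add(1, "https://www.website.com/page1", "Red paintball markers")
index.add(2, "https://www.website.com/page2", "Blue paintball masks")
print(index.search("red paintball"))
```

In this toy design the index holds IDs rather than URLs, so a URL is just one field in a document record. Whether and how a real search engine carries ranking signals across a URL change is a separate question that this sketch does not model.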

Ecommerce website: Product page setup & SKUs

I manage an e-commerce website, and we are looking to make some changes to our product pages to optimise them for search and to improve the customer buying experience. This is where my head starts to hurt! Let's say I am selling a T-shirt that comes in 4 sizes and 6 different colours. At the moment my website would have 24 products, each with pretty much the same content (perhaps with differing references to the colour and size). My idea is to change this and have 1 main product page for the T-shirt, with 24 product SKUs/variations that give the exact product details. These are the options I have been considering: a) have drop-down fields on the product page that ask the customer to select their T-shirt size and colour; the image and price then change on the page. b) List all 24 product SKUs under the main product, with an 'Add to Cart' option next to each one. Each one would be clickable, so it would be a page in its own right. Would I need to set up canonical links for each SKU that point to the top-level product page? I'm obviously looking to minimise duplicate content, but I'm not exactly sure how to set this up. It's a big decision, so I need to be 100% clear before signing off on anything. Any other tips on how to do this, or examples of good e-commerce websites that use product SKUs well? Kind regards, Tom

Technical SEO | DHS_SH
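For option b), where every variant has its own URL, one common pattern is to generate the variant SKUs programmatically and give each variant page a `<link rel="canonical">` pointing at the parent product page. A minimal Python sketch; the size and colour values, the `TSHIRT-001` base SKU and the example URL are placeholders. Search engines treat canonical links as a hint rather than a directive, and option a) typically does not need per-variant canonicals if selecting a size or colour does not change the URL.

```python
from html import escape
from itertools import product

SIZES = ["S", "M", "L", "XL"]                                  # 4 sizes (placeholder values)
COLOURS = ["black", "white", "red", "blue", "green", "grey"]  # 6 colours (placeholder values)


def variant_skus(base_sku):
    """Every size/colour combination as a SKU string: 4 x 6 = 24 variants."""
    return [f"{base_sku}-{size}-{colour.upper()}"
            for size, colour in product(SIZES, COLOURS)]


def canonical_tag(parent_url):
    """<link rel="canonical"> for a variant page, pointing at the parent product."""
    return f'<link rel="canonical" href="{escape(parent_url)}">'


skus = variant_skus("TSHIRT-001")
print(len(skus), skus[:2])
print(canonical_tag("https://www.example.com/t-shirt"))
```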

Structuring URLs for better SEO

Hello, We are rolling out fresh URLs for our new service website. Currently our structure is www.practo.com/health/dental/clinic/bangalore, and we would like to change it to www.practo.com/health/dental-clinic-bangalore. Can someone advise us which of the above structures would work better, and why? Should this be a focus of attention going forward, since this is essentially a search engine platform for patients looking for actual doctors? Thanks, Aditya

Technical SEO | shanky1

Duplicate page titles on Ecommerce

Hi, my question is about an e-commerce site. Our SEOmoz scan is showing many duplicate errors, such as duplicate titles. The majority of these are on the products map, where the page titles are "Products Map :: Company Name". How do we correct this, or does Google not penalize it? Thanks.

Technical SEO | frankrizzo
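Before deciding whether it matters, it can help to see exactly which URLs share a title. A minimal Python sketch, assuming you have exported a crawl as a URL-to-title mapping; the function name and the sample URLs below are hypothetical.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """Group URLs by title and keep only titles shared by more than one URL.

    `pages` maps URL -> title text (assumed export format from a crawl).
    """
    by_title = defaultdict(list)
    for url, title in pages.items():
        by_title[title.strip()].append(url)
    return {t: sorted(urls) for t, urls in by_title.items() if len(urls) > 1}


crawl = {
    "/products-map?page=1": "Products Map :: Company Name",
    "/products-map?page=2": "Products Map :: Company Name",
    "/about": "About Us :: Company Name",
}
print(duplicate_titles(crawl))
```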

How would you create and then segment a large sitemap?

I have a site with around 17,000 pages and would like to create a sitemap and then segment it into product categories. Is it best to create one map and then edit it in something like XMLSpy, or is there a way to silo sitemap creation from the outset?

Technical SEO | SystemIDBarcodes
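One way to silo from the outset is to bucket URLs by category while generating them, then write one sitemap per bucket and list those files in a sitemap index. The Python sketch below assumes the category is the first path segment of each URL, which may not match your URL scheme. The example.com URLs and the `sitemap-<category>.xml` naming are hypothetical. At around 17,000 URLs a single file would also stay under the 50,000-URL limit, so the split is for organisation and reporting rather than a requirement.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_category(urls):
    """Bucket URLs by first path segment, e.g. /printers/x -> "printers".

    Assumes the category is the first path segment; the site root goes to "home".
    """
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        groups[segments[0] if segments else "home"].append(url)
    return dict(groups)


def sitemap_filename(category):
    """Hypothetical naming scheme for the per-category sitemap file."""
    return f"sitemap-{category}.xml"


urls = ["https://www.example.com/printers/zebra-zt410",
        "https://www.example.com/labels/thermal-rolls",
        "https://www.example.com/"]
for category, members in group_by_category(urls).items():
    print(sitemap_filename(category), len(members))
```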