Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

What are the best options for a website built with JavaScript drop-down navigation menus to get those menus indexed by Google?

-

This concerns f5.com, a large website with navigation menus that drop down when hovered over. The sub nav items (example: “DDoS Protection”) are not cached by Google and therefore do not distribute internal links properly to help those sub-pages rank well.

The best option, naturally, is to change the nav menus from JS to CSS, but barring that, is there another option? Will Schema SiteNavigationElement work as an alternative?

-

Meh, I guess not.

It's just like talking about it to clients or friends. I've made some fine noise with lots of technical words.

-

Hi Carl - Did you see Travis' thoughtful response to your question?

-

I would generally prefer CSS over JS for navigational elements, but that probably isn't the problem here. Google can crawl JavaScript and attribute links fine. And per SEMrush, the site appears to be enjoying a pretty sharp uptick in organic traffic recently. That would seem to be at odds with big indexation problems.

I'm not sure whether it's my network, since I'm on a subpar connection right now, but I noticed that some CSS and JS files were timing out when I crawled the site. That could lead to a big problem. I would advise that someone check the server log files to see whether those files are regularly timing out. Ideally, CSS and JS files would be combined/concatenated where possible to reduce the chance of such rendering issues.
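For the log check, here is a rough sketch of what I mean. It assumes the access logs are in the common/combined log format and that a status of 408, 499, 502, 503 or 504 is a fair proxy for "timed out"; both are my assumptions, not something I know about F5's servers. The function name `count_asset_timeouts` is made up for illustration.

```python
import re
from collections import Counter

# Assumption: common/combined log format, for example:
# 1.2.3.4 - - [10/Oct/2016:13:55:36 +0000] "GET /css/main.css HTTP/1.1" 504 0
LOG_LINE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3})\b')

# Assumption: these statuses are treated as timeout-like failures.
TIMEOUT_STATUSES = {"408", "499", "502", "503", "504"}


def count_asset_timeouts(lines):
    """Count timeout-like responses per CSS/JS path in access log lines."""
    failures = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the assumed format
        path = match.group("path").split("?", 1)[0]  # drop any query string
        is_asset = path.endswith((".css", ".js"))
        if is_asset and match.group("status") in TIMEOUT_STATUSES:
            failures[path] += 1
    return failures


if __name__ == "__main__":
    # Hypothetical sample lines; in practice, iterate over the real log file.
    sample = [
        '1.2.3.4 - - [10/Oct/2016:13:55:36 +0000] "GET /css/main.css?v=2 HTTP/1.1" 504 0',
        '1.2.3.4 - - [10/Oct/2016:13:55:37 +0000] "GET /js/nav.js HTTP/1.1" 200 5120',
    ]
    for path, hits in count_asset_timeouts(sample).most_common():
        print(path, hits)
```

If the same few files keep showing up, that is the place to start looking.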

More on that from SE Roundtable

I checked the cache for the EN version of a few of those pages, and they appear to be cached fine.

cache:https://f5.com/products/security/distributed-denial-of-service-ddos-protection returns a cached copy of the page, which is pretty much what we want.

But I do see some issues that could lead to problems with indexation/display. The site has a number of different languages/translations. However, I noticed that the hreflang attribute was missing. It's strongly recommended that hreflang be implemented. You're good on the language meta tag Bing recommends, though.

That would cause some problems, especially on a site that large. I researched Radware, their competitor, years ago. F5 seems like the type of organization that would pay for a decent translation (my German and Spanish are so limited that I couldn't judge the quality of the translations). But if the translations are automatically generated, that would more than likely lead to indexation problems as well.

Another thing I see is that each translation is marked as canonical. This could also cause problems with display and link equity.

Here's more on internationalization from Moz and Google.
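To make the hreflang point concrete, here is a minimal sketch of generating the annotations for one page. The URLs are placeholders on example.com, not F5's real URL structure, and `hreflang_links` is a name I made up. The one thing to keep in mind is that every language version should carry the full set of tags, including one pointing at itself.

```python
from html import escape


def hreflang_links(alternates, x_default=None):
    """Build <link rel="alternate" hreflang="..."> tags for one page.

    alternates maps a language (or language-region) code to the absolute
    URL of that translation. The same list goes in the <head> of every
    translation of the page.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in sorted(alternates.items())
    ]
    if x_default:
        # Optional fallback for users whose language matches no version.
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />'
        )
    return tags


if __name__ == "__main__":
    # Hypothetical URLs, for illustration only.
    pages = {
        "en": "https://example.com/en/ddos-protection",
        "de": "https://example.com/de/ddos-protection",
        "es": "https://example.com/es/ddos-protection",
    }
    for tag in hreflang_links(pages, x_default=pages["en"]):
        print(tag)
```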

I would also look for ways to build internal links to the important products (DDoS Mitigation is supposed to be a huge money maker now) in the body of the home page, not just in boilerplate areas such as the nav and footer.
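A quick way to audit that is sketched below. It assumes the boilerplate areas are wrapped in `<nav>`, `<header>` or `<footer>` elements and that hrefs match the paths you pass in exactly; neither is guaranteed on a real site, and the class and function names are made up for illustration.

```python
from html.parser import HTMLParser

# Assumption: boilerplate is marked up with these semantic elements.
BOILERPLATE_TAGS = {"nav", "header", "footer"}


class BodyLinkCollector(HTMLParser):
    """Collect hrefs of links that sit outside boilerplate containers."""

    def __init__(self):
        super().__init__()
        self.depth = 0  # number of boilerplate containers currently open
        self.body_links = set()

    def handle_starttag(self, tag, attrs):
        if tag in BOILERPLATE_TAGS:
            self.depth += 1
        elif tag == "a" and self.depth == 0:
            href = dict(attrs).get("href")
            if href:
                self.body_links.add(href)

    def handle_endtag(self, tag):
        if tag in BOILERPLATE_TAGS and self.depth > 0:
            self.depth -= 1


def missing_body_links(html, important_paths):
    """Return the important paths that have no link in the page body."""
    collector = BodyLinkCollector()
    collector.feed(html)
    return [path for path in important_paths if path not in collector.body_links]


if __name__ == "__main__":
    # Hypothetical markup and paths, for illustration only.
    page = (
        '<header><a href="/products/ddos">DDoS</a></header>'
        '<main><a href="/products/waf">WAF</a></main>'
    )
    print(missing_body_links(page, ["/products/ddos", "/products/waf"]))
```

Anything the function returns is a product page that only gets links from boilerplate on that page.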

Edit: Forgot to mention that the mobile menu doesn't appear to directly link important products. I would make sure the experience is the same across devices.

Related Questions

-

How to check if an individual page is indexed by Google?

So my understanding is that you can use site: [page url without http] to check if a page is indexed by Google, is this 100% reliable though? Just recently Ive worked on a few pages that have not shown up when Ive checked them using site: but they do show up when using info: and also show their cached versions, also the rest of the site and pages above it (the url I was checking was quite deep) are indexed just fine. What does this mean? thank you p.s I do not have WMT or GA access for these sites

Technical SEO | | linklander0 -

Removed Subdomain Sites Still in Google Index

Hey guys, I've got kind of a strange situation going on and I can't seem to find it addressed anywhere. I have a site that at one point had several development sites set up at subdomains. Those sites have since launched on their own domains, but the subdomain sites are still showing up in the Google index. However, if you look at the cached version of pages on these non-existent subdomains, it lists the NEW url, not the dev one in the little blurb that says "This is Google's cached version of www.correcturl.com." Clearly Google recognizes that the content resides at the new location, so how come the old pages are still in the index? Attempting to visit one of them gives a "Server Not Found" error, so they are definitely gone. This is happening to a couple of sites, one that was launched over a year ago so it doesn't appear to be a "wait and see" solution. Any suggestions would be a huge help. Thanks!!

Technical SEO | | SarahLK0 -

Image Indexing Issue by Google

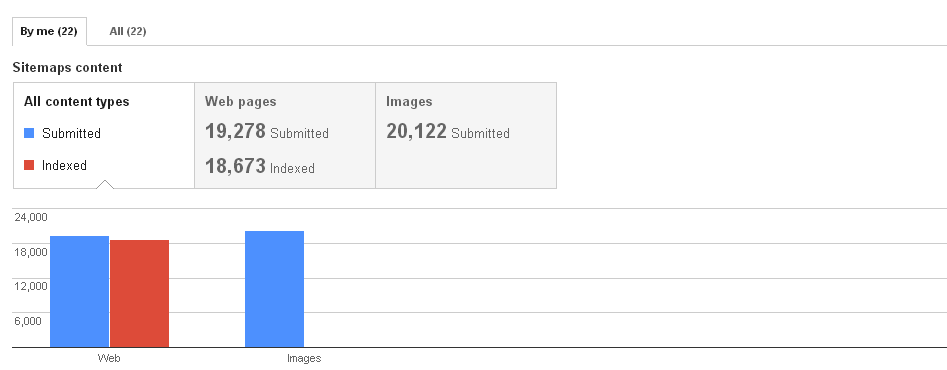

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

Staging site and "live" site have both been indexed by Google

While creating a site we forgot to password protect the staging site while it was being built. Now that the site has been moved to the new domain, it has come to my attention that both the staging site (site.staging.com) and the "live" site (site.com) are both being indexed. What is the best way to solve this problem? I was thinking about adding a 301 redirect from the staging site to the live site via HTACCESS. Any recommendations?

Technical SEO | | melen0 -

Pages removed from Google index?

Hi All, I had around 2,300 pages in the google index until a week ago. The index removed a load and left me with 152 submitted, 152 indexed? I have just re-submitted my sitemap and will wait to see what happens. Any idea why it has done this? I have seen a drop in my rankings since. Thanks

Technical SEO | | TomLondon0 -

CDN Being Crawled and Indexed by Google

I'm doing a SEO site audit, and I've discovered that the site uses a Content Delivery Network (CDN) that's being crawled and indexed by Google. There are two sub-domains from the CDN that are being crawled and indexed. A small number of organic search visitors have come through these two sub domains. So the CDN based content is out-ranking the root domain, in a small number of cases. It's a huge duplicate content issue (tens of thousands of URLs being crawled) - what's the best way to prevent the crawling and indexing of a CDN like this? Exclude via robots.txt? Additionally, the use of relative canonical tags (instead of absolute) appear to be contributing to this problem as well. As I understand it, these canonical tags are telling the SEs that each sub domain is the "home" of the content/URL. Thanks! Scott

Technical SEO | | Scott-Thomas0 -

How to remove a sub domain from Google Index!

Hello, I have a website having many subdomains having same copy of content i think its harming my SEO for that site since abc and xyz sub domains do have same contents. Thus i require to know i have already deleted required subdomain DNS RECORDS now how to have those pages removed from Google index as well ? The DNS Records no more exists for those subdomains already.

Technical SEO | | anand20100 -

How to use overlays without getting a Google penalty

One of my clients is an email subscriber-led business offering deals that are time sensitive and which expire after a limited, but varied, time period. Each deal is published on its own URL and in order to drive subscriptions to the email, an overlay was implemented that would appear over the individual deal page so that the user was forced to subscribe if they wished to view the details of the deal. Needless to say, this led to the threat of a Google penalty which _appears (fingers crossed) _to have been narrowly avoided as a result of a quick response on our part to remove the offending overlay. What I would like to ask you is whether you have any safe and approved methods for capturing email subscribers without revealing the premium content to users before they subscribe? We are considering the following approaches: First Click Free for Web Search - This is an opt in service by Google which is widely used for this sort of approach and which stipulates that you have to let the user see the first item they click on from the listings, but can put up the subscriber only overlay afterwards. No Index, No follow - if we simply no index, no follow the individual deal pages where the overlay is situated, will this remove the "cloaking offense" and therefore the risk of a penalty? Partial View - If we show one or two paragraphs of text from the deal page with the rest being covered up by the subscribe now lock up, will this still be cloaking? I will write up my first SEOMoz post on this once we have decided on the way forward and monitored the effects, but in the meantime, I welcome any input from you guys.

Technical SEO | | Red_Mud_Rookie0