Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be viewable - we have locked both new posts and new replies.

Google Indexed a version of my site w/ MX record subdomain

-

We're doing a site audit and found "internal" links to a page in Search Console that appear to come from a subdomain of our site based on our MX record. We use Google Mail internally. The links ultimately redirect to our correct preferred subdomain, "www", but I am concerned about why this is happening and whether it can have any negative SEO implications.

Example of one of the links:

aspmx3.googlemail.com.sullivansolarpower.com/about/solar-power-blog/daniel-sullivan/renewable-energy-and-electric-cars-are-not-political-footballs

I did a site: operator search on Google (site:aspmx3.googlemail.com.sullivansolarpower.com) and it returns several results.

-

You appear to have the MX sub-domain also set up as an A record.

If you are on a Mac or Linux machine, you can run the command:

host aspmx3.googlemail.com.sullivansolarpower.com

You get the result:

aspmx3.googlemail.com.sullivansolarpower.com has address 72.10.48.198

whereas you should get a "not found" result.

I think you want to delete the A record (though check the documentation of your email provider first). The mail hosts should only need to be set up as MX records; they shouldn't need an A record under your own domain.
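For anyone without the host command, the same check can be sketched in Python. This is only a minimal sketch and not part of the original answer: the function name lookup_address is made up for illustration, and it relies on nothing beyond the standard library's socket.gethostbyname. Once the stray A record is gone, the lookup should fail and the function should return None.

```python
import socket


def lookup_address(hostname, resolver=socket.gethostbyname):
    """Return the IPv4 address a hostname resolves to, or None if it does not resolve.

    `resolver` is a hypothetical hook (defaulting to socket.gethostbyname)
    so the function can be exercised without touching real DNS.
    """
    try:
        return resolver(hostname)
    except socket.gaierror:
        # Name resolution failed: the equivalent of host's "not found".
        return None


# Example use (performs a real DNS lookup, so the result depends on
# the current state of the zone):
#
#   address = lookup_address("aspmx3.googlemail.com.sullivansolarpower.com")
#   print("still resolves to", address) if address else print("not found")
```

Running it before and after removing the record gives a quick way to confirm that the change has taken effect, bearing in mind that DNS caches can keep the old answer around for a while.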

You've done the right thing by setting up the redirect - which should mean that the pages drop out of the index and those links disappear. (Note that there is also an https error on the aspmx3 sub-domain - but given that you don't actually want it, I don't suppose that matters that much).

Hope that helps.

-

I did not explain the problem thoroughly. The problem is that the link does not actually exist anywhere. To make a very long story short, there was an issue with our server configuration for a couple of months. During that time, an unknown number of non-existent subdomains got indexed. Basically, if anyone had a typo in the subdomain when accessing our site, the response would get cached, and if Google crawled our site before we cleared the cache, the typo subdomain would get indexed. Over those couple of months, many bad subdomains were accidentally created and indexed by Google, and we have no way of finding a comprehensive list of them. The problem has been resolved, so no new bad subdomains are being created and indexed, but the damage has been done.

The way our site is set up currently, any attempt to reach it with any subdomain other than "www" gets redirected to "www.sullivan...". Also, any non-secure request gets redirected to https://
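As a rough illustration of the redirect rule described above (any non-"www" subdomain and any non-secure request end up on the https://www version of the same page), the expected target for a given URL could be computed as follows. This is a sketch under stated assumptions, not the actual server configuration: the function name canonical_url, the canonical host www.sullivansolarpower.com, and the idea that path and query string are preserved are all assumptions made for the example.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url, canonical_host="www.sullivansolarpower.com"):
    """Return the URL a request is expected to be redirected to.

    Assumes (hypothetically) that the redirect keeps the path, query
    string and fragment, and only swaps the scheme and the host.
    """
    parts = urlsplit(url)
    return urlunsplit(
        ("https", canonical_host, parts.path, parts.query, parts.fragment)
    )


# Example: a stray subdomain over plain http maps to the https://www page.
print(canonical_url(
    "http://aspmx3.googlemail.com.sullivansolarpower.com/about/solar-power-blog"
))
```

Comparing this expected target with the Location header the server actually returns for a stray subdomain is one way to spot-check that the redirect behaves as described.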

The actual problem, simply put, is this: Google's index includes an unknown number of non-existent subdomains of our site. We need to get rid of them and cannot figure out how.

Example: Copy and paste the following into google and search it:

site:aspmx3.googlemail.com.sullivansolarpower.com

Google will return two results. If you click on either, it resolves to the "https://www." version of the page.

I know it is confusing, but does that make sense? I have searched everywhere, but this happened because of a perfect storm of server configuration issues, and I cannot find anyone else who has had the same problem.

If it were one or two bad subdomains, we would just add them to Search Console and then use "remove URL" for the entire subdomain. But it is not one or two. It is at least 10 that I know of, and it could be hundreds for all I know.
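One way to at least start a list of the stray subdomains would be to pull the distinct hostnames out of whatever URL lists are available, for example a links export from Search Console or the server's access logs. The sketch below assumes such a list already exists as plain strings; the function name stray_hosts, the default domain and canonical host, and the sample URLs (including the "ww" typo) are hypothetical and only there for illustration. It will not find subdomains that never appear in those sources.

```python
from urllib.parse import urlsplit


def stray_hosts(urls, domain="sullivansolarpower.com",
                canonical="www.sullivansolarpower.com"):
    """Return the sorted, distinct subdomains of `domain` other than `canonical`."""
    hosts = set()
    for url in urls:
        url = url.strip()
        if not url:
            continue
        # Exported links often lack a scheme; "//" lets urlsplit see the host.
        if "://" not in url:
            url = "//" + url
        host = (urlsplit(url).hostname or "").lower()
        # Only subdomains of `domain` count, which also leaves out the bare domain.
        if host.endswith("." + domain) and host != canonical:
            hosts.add(host)
    return sorted(hosts)


# Hypothetical sample input mixing good and bad URLs.
sample = [
    "aspmx3.googlemail.com.sullivansolarpower.com/about/solar-power-blog",
    "https://www.sullivansolarpower.com/about",
    "http://ww.sullivansolarpower.com/",
]
print(stray_hosts(sample))
```

Each hostname in the resulting list could then be handled the same way as the one or two known cases.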

Does anyone have any ideas? Any and all would be welcome.

Thank you.

-

You should find the locations of those links and correct them to point to the proper URL. I find that Screaming Frog's crawl is the easiest way to do this: you can find every link and see where each one is located.

Browse Questions

Explore more categories

-

Moz Tools

Chat with the community about the Moz tools.

-

SEO Tactics

Discuss the SEO process with fellow marketers

-

Community

Discuss industry events, jobs, and news!

-

Digital Marketing

Chat about tactics outside of SEO

-

Research & Trends

Dive into research and trends in the search industry.

-

Support

Connect on product support and feature requests.

Related Questions

-

Site Audit Tools Not Picking Up Content Nor Does Google Cache

Hi Guys, Got a site I am working with on the Wix platform. However site audit tools such as Screaming Frog, Ryte and even Moz's onpage crawler show the pages having no content, despite them having 200 words+. Fetching the site as Google clearly shows the rendered page with content, however when I look at the Google cached pages, they also show just blank pages. I have had issues with nofollow, noindex on here, but it shows the meta tags correct, just 0 content. What would you look to diagnose? I am guessing some rogue JS but why wasn't this picked up on the "fetch as Google".

Technical SEO | | nezona0 -

Not all images indexed in Google

Hi all, Recently, got an unusual issue with images in Google index. We have more than 1,500 images in our sitemap, but according to Search Console only 273 of those are indexed. If I check Google image search directly, I find more images in index, but still not all of them. For example this post has 28 images and only 17 are indexed in Google image. This is happening to other posts as well. Checked all possible reasons (missing alt, image as background, file size, fetch and render in Search Console), but none of these are relevant in our case. So, everything looks fine, but not all images are in index. Any ideas on this issue? Your feedback is much appreciated, thanks

Technical SEO | | flo_seo1 -

Google not Indexing images on CDN.

My URL is: https://bit.ly/2hWAApQ We have set up a CDN on our own domain: https://bit.ly/2KspW3C We have a main xml sitemap: https://bit.ly/2rd2jEb and https://bit.ly/2JMu7GB is one the sub sitemaps with images listed within. The image sitemap uses the CDN URLs. We verified the CDN subdomain in GWT. The robots.txt does not restrict any of the photos: https://bit.ly/2FAWJjk. Yet, GWT still reports none of our images on the CDN are indexed. I ve followed all the steps and still none of the images are being indexed. My problem seems similar to this ticket https://bit.ly/2FzUnBl but however different because we don't have a separate image sitemap but instead have listed image urls within the sitemaps itself. Can anyone help please? I will promptly respond to any queries. Thanks

Technical SEO | | TNZ

Deepinder0 -

Site indexed by Google, but (almost) never gets impressions

Hi there, I have a question that I wasn't able to give it a reasonable answer yet, so I'm going to trust on all of you. Basically a site has all its pages indexed by Google (I verified with site:sitename.com) and it also has great and unique content. All on-page grades are A with absolutely no negative factors at all. However its pages do not get impressions almost at all. Of course I didn't expect it to be on page 1 since it has been launched on Dec, 1st, but it looks like Google is ignoring (or giving it bad scores) for some reason. Only things that can contribute to that could be: domain privacy on the domain, redirect from the www to the subdomain we use (we did this because it will be a multi-language site, so we'll assign to each country a subdomain), recency (it has been put online on Dec 1st and the domain is just a couple of months old). Or maybe because we blocked crawlers for a few days before the launch? Exactly a few days before Dec 1st. What do you think? What could be the reason for that? Thanks guys!

Technical SEO | | ruggero0 -

Image Indexing Issue by Google

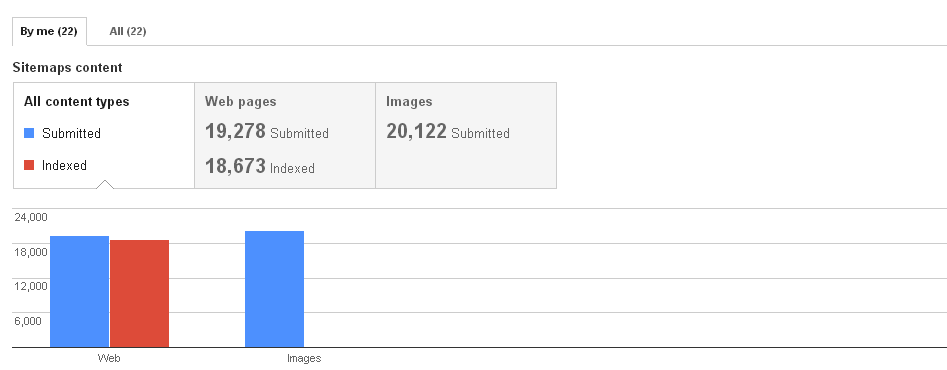

Hello All,My URL is: www.thesalebox.comI have Submitted my image Sitemap in google webmaster tool on 10th Oct 2013,Still google could not indexing any of my web images,Please refer my sitemap - www.thesalebox.com/AppliancesHomeEntertainment.xml and www.thesalebox.com/Hardware.xmland my webmaster status and image indexing status are below,

Technical SEO | | CommercePundit Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0

Can you please help me, why my images are not indexing in google yet? is there any issue? please give me suggestions?Thanks!

0 -

NoIndex/NoFollow pages showing up when doing a Google search using "Site:" parameter

We recently launched a beta version of our new website in a subdomain of our existing site. The existing site is www.fonts.com with the beta living at new.fonts.com. We do not want Google to crawl the new site until it's out of beta so we have added the following on all pages: However, one of our team members noticed that google is displaying results from new.fonts.com when doing an "site:new.fonts.com" search (see attached screenshot). Is it possible that Google is indexing the content despite the noindex, nofollow tags? We have double checked the syntax and it seems correct except the trailing "/". I know Google still crawls noindexed pages, however, the fact that they're showing up in search results using the site search syntax is unsettling. Any thoughts would be appreciated! DyWRP.png

Technical SEO | | ChrisRoberts-MTI0 -

How do I redirect index.html to the root / ?

The site I've inherited had operated on index.html at one point, and now uses index.php for the home page, which goes to the / page. The index.html was lost in migrating server hosts. How do I redirect the index.html to the / page? I've tried different options that keep giving ending up with the same 404 error. I tried a redirect from index.html to index.php which ended in an infinite loop. Because the index.html no longer exists in the root, should I created it and then add a redirect to it? Can I avoid this by editing the .htaccess? Any help is appreciated, thanks in advance!

Technical SEO | | NetPicks0 -

Index forum sites

Hi Moz Team, somehow the last question i raised a few days ago not only wasnt answered up until now, it was also completely deleted and the credit was not "refunded" - obviously there was some data loss involved with your restructuring. Can you check whether you still find the last question and answer it quickly? I need the answer 🙂 Here is one more question: I bought a website that has a huge forum, loads of pages with user generated content. Overall around 500.000 Threads with 9 Million comments. The complete forum is noindex/nofollow when i bought the site, now i am thinking about what is the best way to unleash the potential. The current system is vBulletin 3.6.10. a) Shall i first do an update of vbulletin to version 4 and use the vSEO tool to make the URLs clean, more user and search engine friendly before i switch to index/follow? b) would you recommend to have the forum in the folder structure or on a subdomain? As far as i know subdomain does take lesser strenght from the TLD, however, it is safer because the subdomain is seen as a separate entity from the regular TLD. Having it in he folder makes it easiert to pass strenght from the TLD to the forum, however, it puts my TLD at risk c) Would you release all forum sites at once or section by section? I think section by section looks rather unnatural not only to search engines but also to users, however, i am afraid of blasting more than a millionpages into the index at once. d) Would you index the first page of a threat or all pages of a threat? I fear duplicate content as the different pages of the threat contain different body content but the same Title and possibly the same h1. Looking forward to hear from you soon! Best Fabian

Technical SEO | | fabiank0