In August 2024, Google published a patent titled "Search With Stateful Chat." The patent outlines Google’s plans to bake conversational memory into search. Michael King, founder of iPullRank, was one of the first to spotlight this patent with a technical breakdown that got the industry buzzing.

The patent describes:

- A system that remembers what you’ve searched for before

- Then builds a persistent user state

- And uses large language models (LLMs) to guide you through multi-step search journeys

In my opinion, this isn’t just a better search experience; it’s a fundamental restructuring that could dismantle the open web as we know it.

I know that sounds extreme. But I read the full patent, and in this op-ed, I’ll unpack what Google’s really building, and how it forces us to rethink content creation for a memory-driven, probabilistic search engine.

The problem Google is trying to solve (and create at the same time)

Before we get into the technical mechanics of the patent, let’s talk about why Google is reinventing search in the first place.

Google says it wants to make search faster and effortless, but there’s more to it.

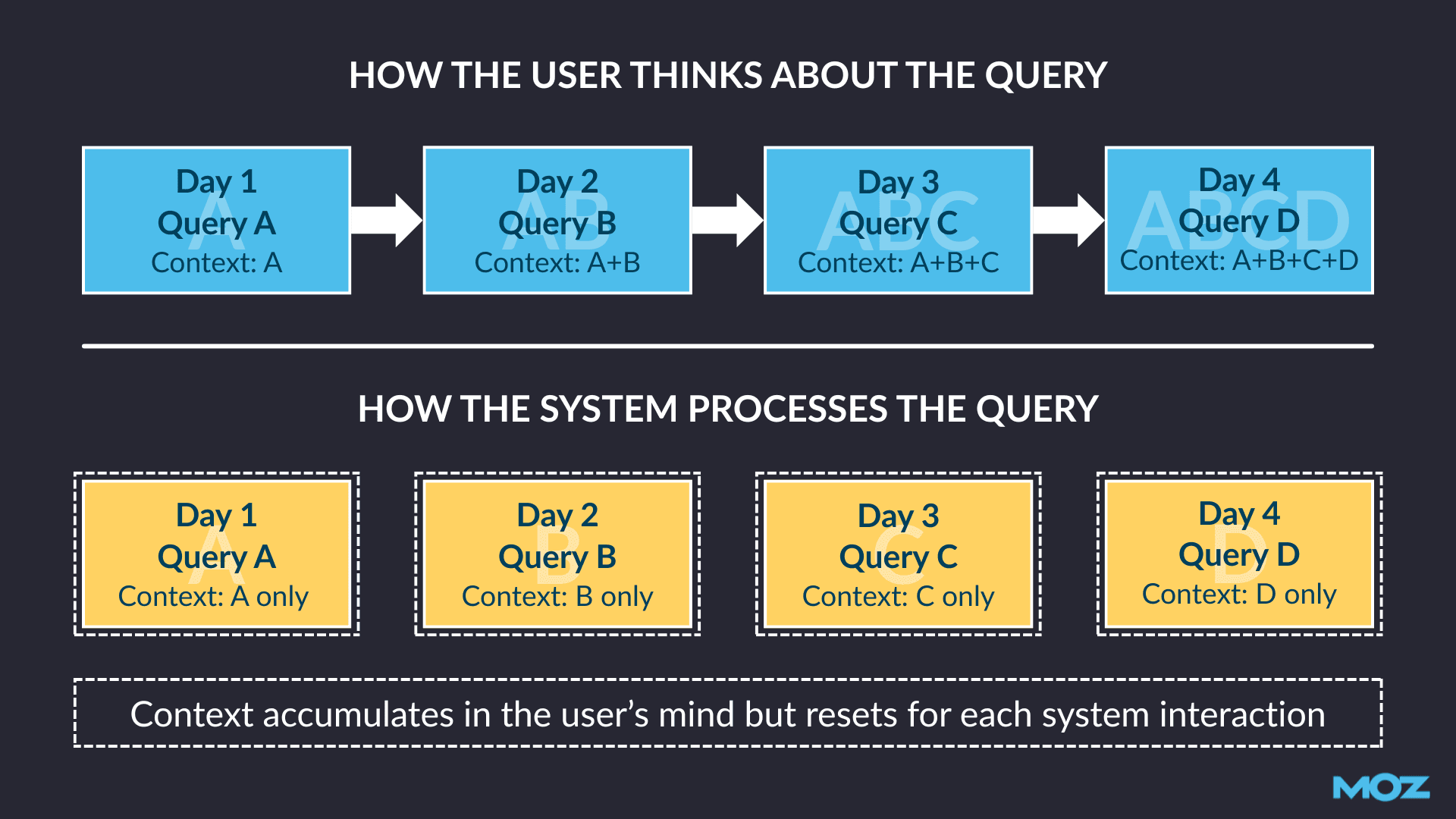

It’s really about the fundamental mismatch between how humans look for information and how traditional search engines are built to respond.

Let’s use an example, like buying a camera for photography.

Traditional search assumes users type something like “best cameras 2025,” skim the results, click, and move on.

But the real search behavior looks more like this:

- Day 1, on a laptop at work: “Best cameras for beginners 2025”

- Same day, over lunch: “Which is better: Canon EOS R50 vs Sony a6400?”

- That evening, on their phone: “Canon EOS R50 image quality reviews”

- Three days later, at home after watching a TikTok video: “Is Canon EOS R50 good for video recording and YouTube content?”

- One week later, after checking their budget: “Cheaper alternative to Canon EOS R50” or “Discount coupon for Canon EOS R50”

This is how real people search—fragmented across days, devices, moods, and mental models. Each search builds on the last, but traditional search treats them like isolated one-offs.

Users have to manually carry over what they’ve learned, synthesize across tabs and sources, and re-establish context every time they search.

Chima talked about this fragmented search experience in a previous article that you should definitely read.

Google's attempt to fix this is to engineer a system that remembers what you’ve already searched, predicts what you’ll need next, and walks you through complex decisions with AI support.

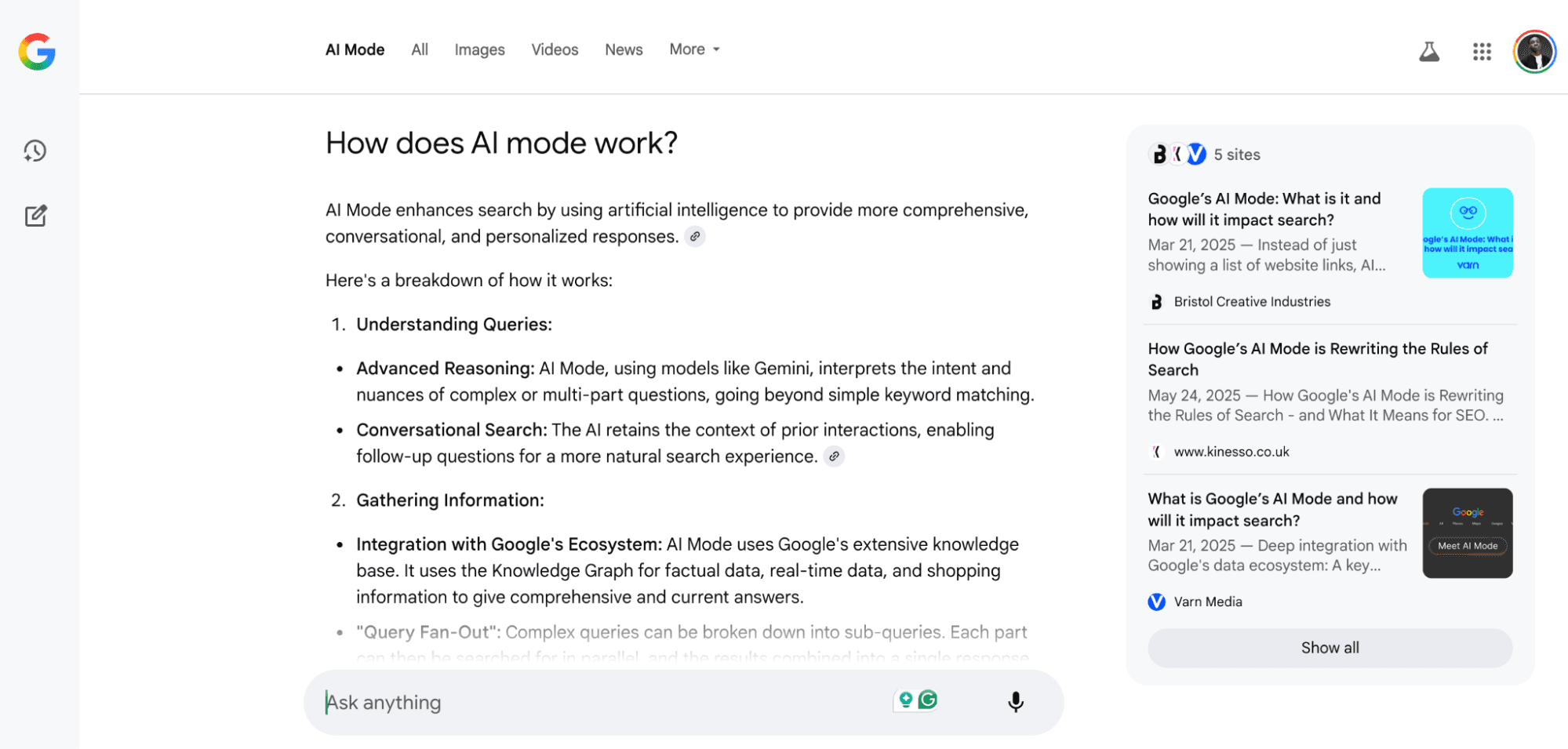

That system is AI Mode.

Announced publicly in early 2025, AI Mode builds on AI Overviews but adds persistent memory and reasoning. It guides users through multi-step journeys by tracking query history and adapting to evolving context.

However, Google isn't just changing how search works; they are changing who controls information flow and how economic value is distributed across the entire web ecosystem.

With AI Mode, Google becomes the web itself rather than a guide pointing people to the right resources. Publishers create content, Google's AI synthesizes it into answers, and users get what they need without having to click through.

SEOs and publishers bear the costs of research and content production while Google stands to capture more ad value on zero-click experiences.

And yes, they’ll monetize it.

They’re giving you attribution links in AI search features, but fewer people are clicking through to your website.

It’s a 2-for-1, really. Google is fixing search while consolidating web traffic and economic power to make it harder for everyone else to compete.

The rest of this article explains the mechanisms that make that possible and why the shift might be permanent.

My seven biggest takeaways from analyzing Google’s AI Mode patent

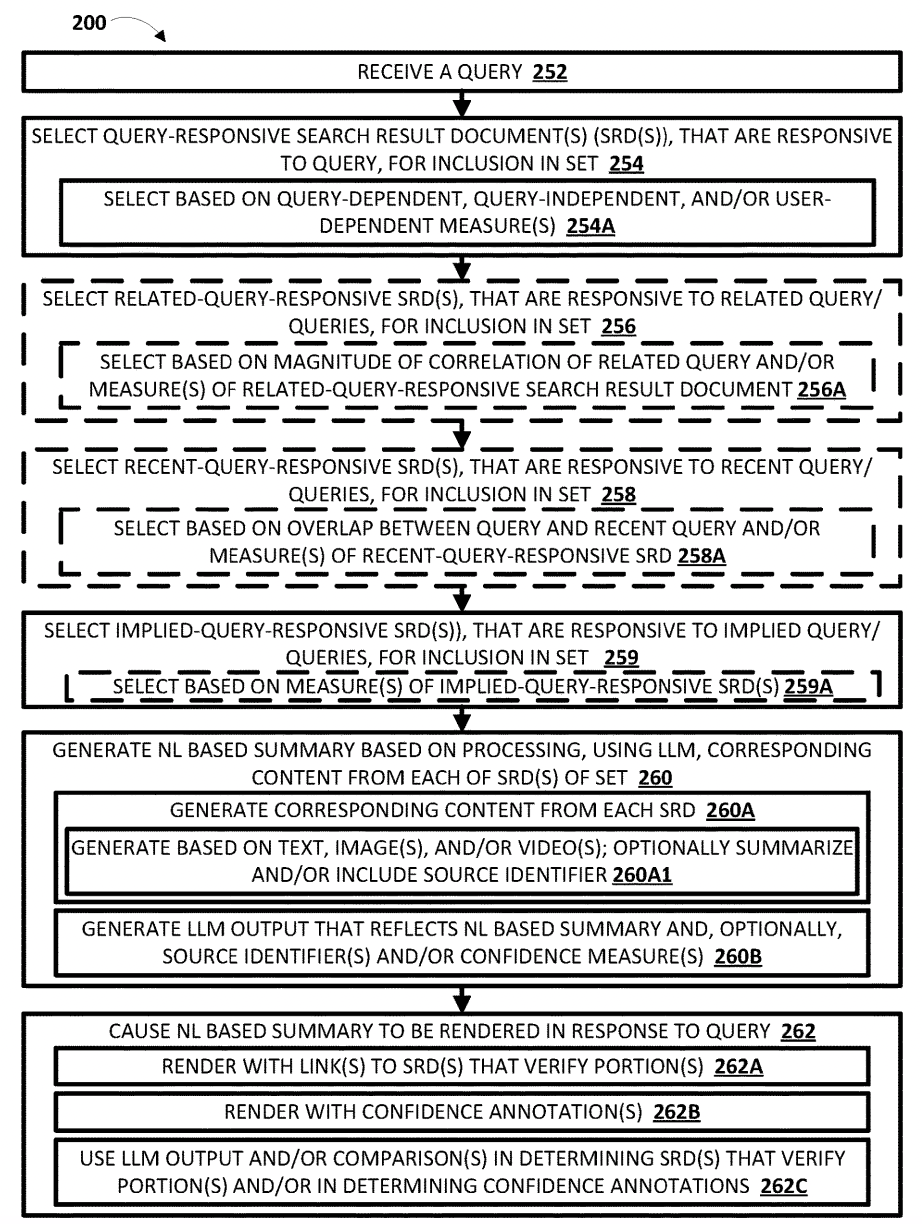

This flowchart from the patent lays out the core architecture of AI Mode. It shows how Google turns every search into an AI-mediated experience.

Image source: Search with stateful chat patent

Strip away the technical jargon, and here’s how it works:

When you enter a query, the system looks for documents matching that query, plus documents relevant to:

- Related queries

- Recent queries

- Implied queries based on what Google thinks the user needs

These documents are processed through LLMs to generate natural language (NL) summaries. Google then determines which parts of the summaries can be “verified” by specific source documents and creates attribution links accordingly.
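To make the retrieval step above concrete, here's a toy Python sketch. It is not Google's implementation: the `Doc` type, the word-overlap matching, and the example URLs are all assumptions I made up for illustration. It only shows the idea that the candidate pool is the union of documents matched by the typed query and by the related, recent, and implied queries. The summarization and verification steps are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Doc:
    url: str
    text: str


def tokens(text: str) -> set[str]:
    return set(text.lower().split())


def retrieve(query: str, corpus: list[Doc], min_overlap: int = 2) -> list[Doc]:
    """Toy retrieval: keep docs sharing at least `min_overlap` words with the query."""
    q = tokens(query)
    return [d for d in corpus if len(q & tokens(d.text)) >= min_overlap]


def gather_candidates(
    query: str,
    related: list[str],
    recent: list[str],
    implied: list[str],
    corpus: list[Doc],
) -> list[Doc]:
    """Union of docs matched by the typed query and by every additional query."""
    seen: dict[str, Doc] = {}
    for q in [query, *related, *recent, *implied]:
        for doc in retrieve(q, corpus):
            seen.setdefault(doc.url, doc)  # de-duplicate by URL, keep first-seen order
    return list(seen.values())


# Hypothetical mini corpus, for illustration only.
corpus = [
    Doc("/beginner-cameras", "best cameras for beginners"),
    Doc("/r50-video-review", "canon eos r50 video review"),
    Doc("/sourdough", "sourdough bread recipe"),
]
pool = gather_candidates(
    "best cameras beginners", [], ["canon eos r50 review"], [], corpus
)
print([d.url for d in pool])  # prints ['/beginner-cameras', '/r50-video-review']
```

Notice that the second page enters the pool only because of a recent query the user never retyped. That is the part of the pipeline you can't see from the outside.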

To understand this shift, we need to unpack the seven core mechanisms driving it. These are the pillars of a new information retrieval paradigm where content isn’t read but interpreted, summarized, and cited (if you’re lucky).

For reference, I’ve included annotated screenshots from the patent showing the exact paragraphs behind each takeaway.

1. Search is becoming conversational with memory

Image source: Search with stateful chat patent

In plain English, Google is turning search into a conversation with an AI assistant that remembers.

This assistant filters, curates, and synthesizes search results based on an evolving understanding of your context. According to the patent, the system maintains a “contextual state of a user across multiple turns of a chat search session.”

Google’s generative companion keeps track of:

- What you’ve already asked

- What it showed you in previous search results

- What you engaged with

This persistent memory means your next result isn’t just a response to your latest query, but shaped by your full search journey.
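If it helps to picture that state, here's a minimal sketch of what a per-session memory could look like. The `Turn` and `SessionState` names, the fields, and the three-turn window are my own assumptions, not structures taken from the patent. The point is simply that each new query travels with what was asked, shown, and engaged with before.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    query: str
    shown_urls: list[str]
    engaged_urls: list[str]


@dataclass
class SessionState:
    turns: list[Turn] = field(default_factory=list)

    def record(self, query: str, shown_urls, engaged_urls=()) -> None:
        """Store one turn: what was asked, what was shown, what was clicked."""
        self.turns.append(Turn(query, list(shown_urls), list(engaged_urls)))

    def context_for(self, new_query: str, window: int = 3) -> dict:
        """Bundle the new query with a summary of the most recent turns."""
        recent = self.turns[-window:]
        return {
            "query": new_query,
            "prior_queries": [t.query for t in recent],
            "already_shown": sorted({u for t in recent for u in t.shown_urls}),
            "engaged": sorted({u for t in recent for u in t.engaged_urls}),
        }


state = SessionState()
state.record("best cameras for beginners", ["/a", "/b"], ["/a"])
state.record("canon eos r50 vs sony a6400", ["/b", "/c"])
print(state.context_for("cheaper alternative")["prior_queries"])
# prints ['best cameras for beginners', 'canon eos r50 vs sony a6400']
```

In this sketch, "cheaper alternative" on its own is ambiguous, but the bundled context makes it obvious which product the user means.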

There are big implications for content. Your blog post or product page isn’t competing for a single keyword, but is judged as part of a longer narrative within a sequence of past searches, user behavior, and prior AI responses.

If your content doesn’t fit the user's ongoing state, it might not appear at all. While traditional ranking signals still matter, contextual fit with the user’s journey is now baked into an expanded query we can’t see. In practice, that means optimizing blind against hidden complexity.

This turns Google from a search engine into a dynamic gatekeeper that decides in real time what deserves to be shown, based on memory and context, not just query match.

2. Google is embedding your intent as a permanent state

Image source: Search with stateful chat patent

If you’re not familiar with embeddings, think of them as mathematical representations of meaning. Instead of storing your literal search history, Google converts your behavior into numbers that capture relationships between concepts.

Basically, it’s search history as vector math. This is a direct application of semantic search, and it’s not brand new. Folks like Dan Hinckley have shown how OpenAI’s patent highlights the importance of semantic SEO to chunk content, embed it into vector space, and match it against intent.

What’s new is how Google applies it to users themselves. Each person ends up with a kind of semantic fingerprint: a dynamic, multidimensional snapshot that includes explicit queries, implicit signals, and past interactions.

A user is no longer just a single query, but a constantly evolving semantic embedding that represents Google's holistic understanding of their intent, context, and knowledge.

Yes, it’s giving The Matrix.

It introduces a new level of personalization and surveillance where two users can ask the same question and get completely different answers based on Google’s mathematical model of who they are as information seekers.
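Here's a toy sketch of how a user embedding could change results for the same query. Everything in it is an assumption for illustration: the three-number vectors (think of the axes as stills, video, and budget), the moving-average update, and the 50/50 blend are mine, not details from the patent.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def update_state(state: list[float], signal: list[float], decay: float = 0.8) -> list[float]:
    """Exponential moving average: old state fades, new behavior is mixed in."""
    return [decay * s + (1 - decay) * x for s, x in zip(state, signal)]


def rank(query_vec, user_state, docs: dict, user_weight: float = 0.5) -> list[str]:
    """Rank docs against a blend of the query vector and the user's state."""
    blended = [
        (1 - user_weight) * q + user_weight * u
        for q, u in zip(query_vec, user_state)
    ]
    return sorted(docs, key=lambda name: cosine(blended, docs[name]), reverse=True)


# Hypothetical document embeddings on the axes [stills, video, budget].
docs = {"stills-review": [1.0, 0.0, 0.0], "vlogging-guide": [0.0, 1.0, 0.0]}
query = [0.6, 0.6, 0.0]        # the same typed query for both users
video_fan = [0.1, 0.9, 0.0]    # state built from video-heavy behavior
stills_fan = [0.9, 0.1, 0.0]   # state built from photography-heavy behavior

print(rank(query, video_fan, docs)[0])   # prints vlogging-guide
print(rank(query, stills_fan, docs)[0])  # prints stills-review
```

The query is identical in both calls. Only the user state differs, and that alone flips which page comes first.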

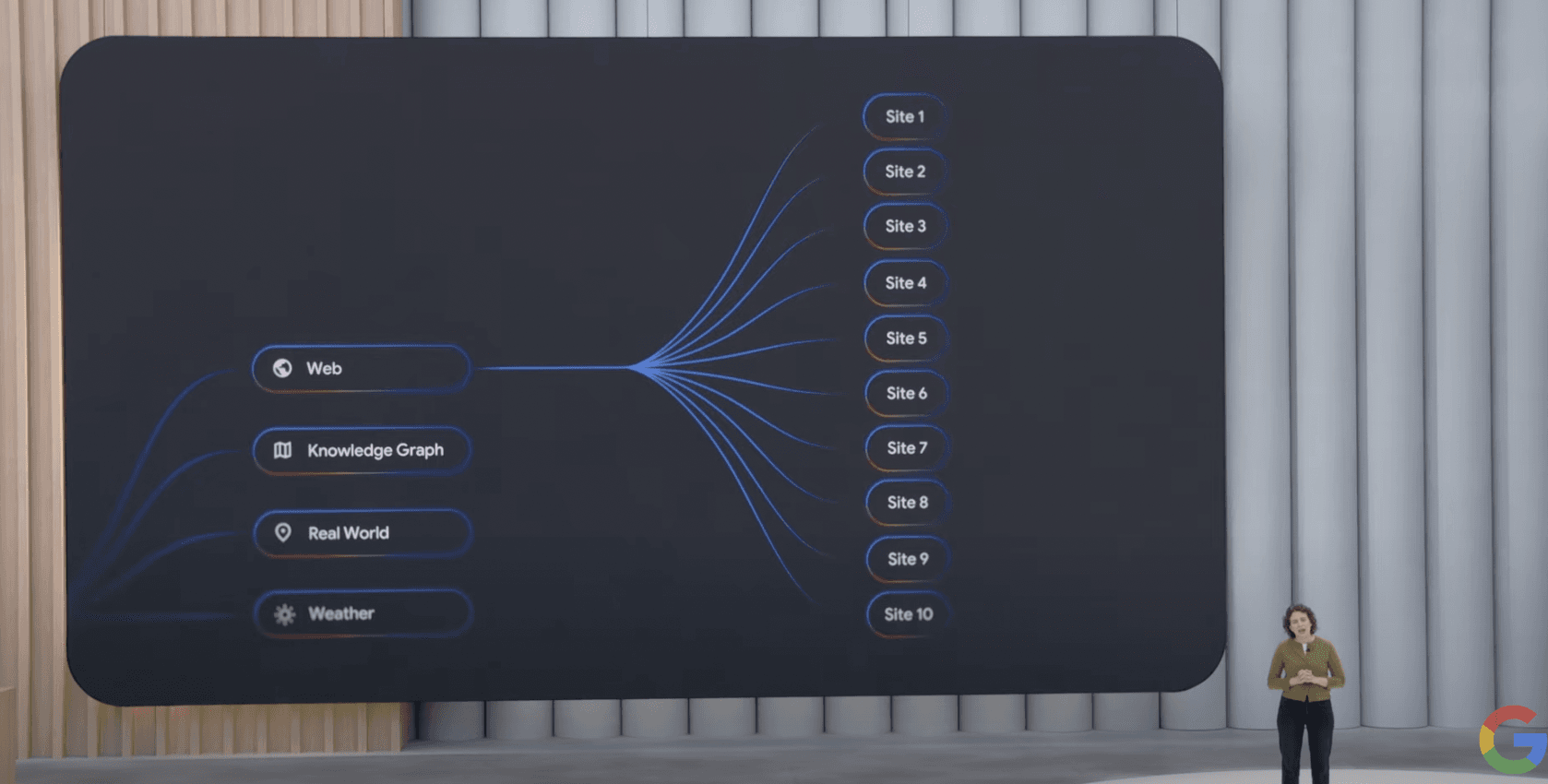

3. Synthetic queries mean you’re competing with invisible searches

At Google I/O 2025, the company introduced what it calls its "query fan-out technique."

As Liz Reid, VP and Head of Search, explained:

“Under the hood, AI Mode uses our query fan-out technique, breaking down your question into subtopics and issuing a multitude of queries simultaneously on your behalf.”

Image source: Search with stateful chat patent

It confirms what the patent described regarding query expansion. Google's system generates additional queries using LLMs that function as:

- Alternative formulations

- Follow-up questions

- Rewritten versions

- Drill-down queries created in real-time based on your original query and contextual information

Your content isn’t just being evaluated against what the user typed. It’s being judged against a multitude of queries that enable Search to dive deeper into the web than a traditional search on Google.
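One rough way to think about this is coverage: out of all the fan-out queries, how many does your page actually address? The sketch below is only an illustration. The fan-out list is hand-written (a real system would generate it with an LLM), and the term-overlap test and the 0.8 threshold are assumptions of mine, far cruder than anything Google would use.

```python
STOPWORDS = {"a", "an", "the", "for", "is", "to", "of", "and", "vs", "in", "on"}


def terms(text: str) -> set[str]:
    """Lowercase, strip punctuation, drop stopwords."""
    cleaned = "".join(c if c.isalnum() else " " for c in text.lower())
    return {w for w in cleaned.split() if w not in STOPWORDS}


def covers(page_text: str, query: str, min_share: float = 0.8) -> bool:
    """A page 'covers' a query if it contains most of the query's terms."""
    q = terms(query)
    if not q:
        return False
    return len(q & terms(page_text)) / len(q) >= min_share


def fanout_coverage(page_text: str, fanout_queries: list[str]):
    """Return the share of fan-out queries covered, plus the covered queries."""
    if not fanout_queries:
        return 0.0, []
    hits = [q for q in fanout_queries if covers(page_text, q)]
    return len(hits) / len(fanout_queries), hits


page = "Canon EOS R50 review: image quality, video recording and price"
fanout = [  # hypothetical synthetic queries, written by hand
    "canon eos r50 image quality",
    "canon eos r50 video recording",
    "cheaper alternative to canon eos r50",
    "canon eos r50 battery life",
]
share, hits = fanout_coverage(page, fanout)
print(share)  # prints 0.5
```

In this toy run the page answers the two queries closest to the literal search but misses the budget and battery angles, which is exactly the gap fan-out exposes.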

This flips everything we know about SEO on its head because you’re no longer optimizing for literal search inputs but for what Google’s AI thinks the user might mean, want next, or need clarified via its fan-out technique.

Also, Google says its new Deep Search capability takes fan-out to the next level, issuing hundreds of searches, reasoning across disparate sources, and generating expert-level, fully cited summaries in minutes.

Mike King calls it a complex matrixed event. If your content only responds to the literal query, it may be completely irrelevant to the full cluster of fan-out queries Google is using to evaluate it.

4. Content is now routed based on what kind of answer it needs to be

Image source: Search with stateful chat patent

The patent reveals that Google classifies queries into predefined categories, each determining how the system processes your search.

Based on this classification, Google activates specific downstream LLMs, each trained to handle a particular response type.

The second you hit search, Google decides what kind of answer you need, and that decision dictates which AI model is activated to process your query.
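The classify-then-route pattern can be sketched in a few lines. To be clear, the keyword cues, the category names, and the placeholder handlers below are hypothetical. The patent doesn't tell us how Google's classifier works, and a real one would almost certainly be a model rather than a keyword list.

```python
from typing import Callable


def classify(query: str) -> str:
    """Toy classifier: pick a response type from simple keyword cues."""
    q = query.lower()
    if any(cue in q for cue in ("write", "draft", "compose")):
        return "creative_generation"
    if any(cue in q for cue in ("summarize", "summary", "overview")):
        return "summarization"
    return "general"


# Placeholder handlers standing in for specialized downstream models.
HANDLERS: dict[str, Callable[[str], str]] = {
    "creative_generation": lambda q: f"[creative model] {q}",
    "summarization": lambda q: f"[summarization model] {q}",
    "general": lambda q: f"[general model] {q}",
}


def route(query: str) -> str:
    """Send the query to whichever handler its classification selects."""
    return HANDLERS[classify(query)](query)


print(route("Summary of Canon EOS R50 reviews"))
# prints [summarization model] Summary of Canon EOS R50 reviews
```

The design point is that the classification happens before any content is considered, so the same page can be a good fit for one branch and a poor fit for another.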

This has massive implications for content strategy because content that performs well under one classification may fail completely under another.

For example:

- If a query is classified as needing creative text generation, the system may favor content with unique insight or original thinking.

- A query classified for summarization may favor structured content with clear facts and data points.

The challenge is that this classification happens behind the scenes. SEOs have almost no visibility into how these classifications are made, and that uncertainty makes strategy more complex and dependent on creating content that’s adaptable to how Google frames the user’s need.

5. Google is deciding which sources get cited in AI summaries

Image source: Search with stateful chat patent

This section of the patent outlines how Google “linkifies” portions of Natural Language summaries with links to Search Result Documents (SRDs) that verify them.

Here’s how verification works:

Google converts both the AI-generated statement and candidate source documents into embeddings (mathematical representations of meaning) and then uses a distance measure to see how semantically close they are.

Only sources that are mathematically “close enough” to the AI’s summary get cited.
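Here's a minimal sketch of that verification idea. I'm swapping in a bag-of-words vector for a real embedding model, and the cosine distance and the 0.5 cutoff are assumptions for illustration. The patent describes a distance measure but I'm not claiming this is the one Google uses.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: word counts (a real system would use a learned model)."""
    cleaned = "".join(c if c.isalnum() else " " for c in text.lower())
    return Counter(cleaned.split())


def cosine_distance(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return 1.0 - (dot / norm if norm else 0.0)


def verify(statement: str, sources: dict[str, str], max_distance: float = 0.5) -> list[str]:
    """Return URLs close enough to the statement to be cited, nearest first."""
    s = embed(statement)
    scored = [(cosine_distance(s, embed(text)), url) for url, text in sources.items()]
    return [url for dist, url in sorted(scored) if dist <= max_distance]


sources = {  # hypothetical source passages
    "/r50-specs": "Canon EOS R50 records 4K video at 30 fps",
    "/sourdough": "Sourdough bread needs a long fermentation",
}
print(verify("The Canon EOS R50 records 4K video", sources))
# prints ['/r50-specs']
```

The practical takeaway from a setup like this: a passage that states the claim plainly and in similar terms sits closer to the summary than one that buries the same fact in unrelated text.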

But it goes deeper. Google can create:

- General links to entire documents

- Anchor links that take users directly to the paragraph supporting the claim

This is sentence-level granularity, and you might see underlined text, highlights, or small icons that signal attribution. If multiple sources verify a single claim, each one gets its own clickable icon.

The focus shifts from "here are some websites about your topic" to "here's the answer, and here are the exact sources that prove each part of it."

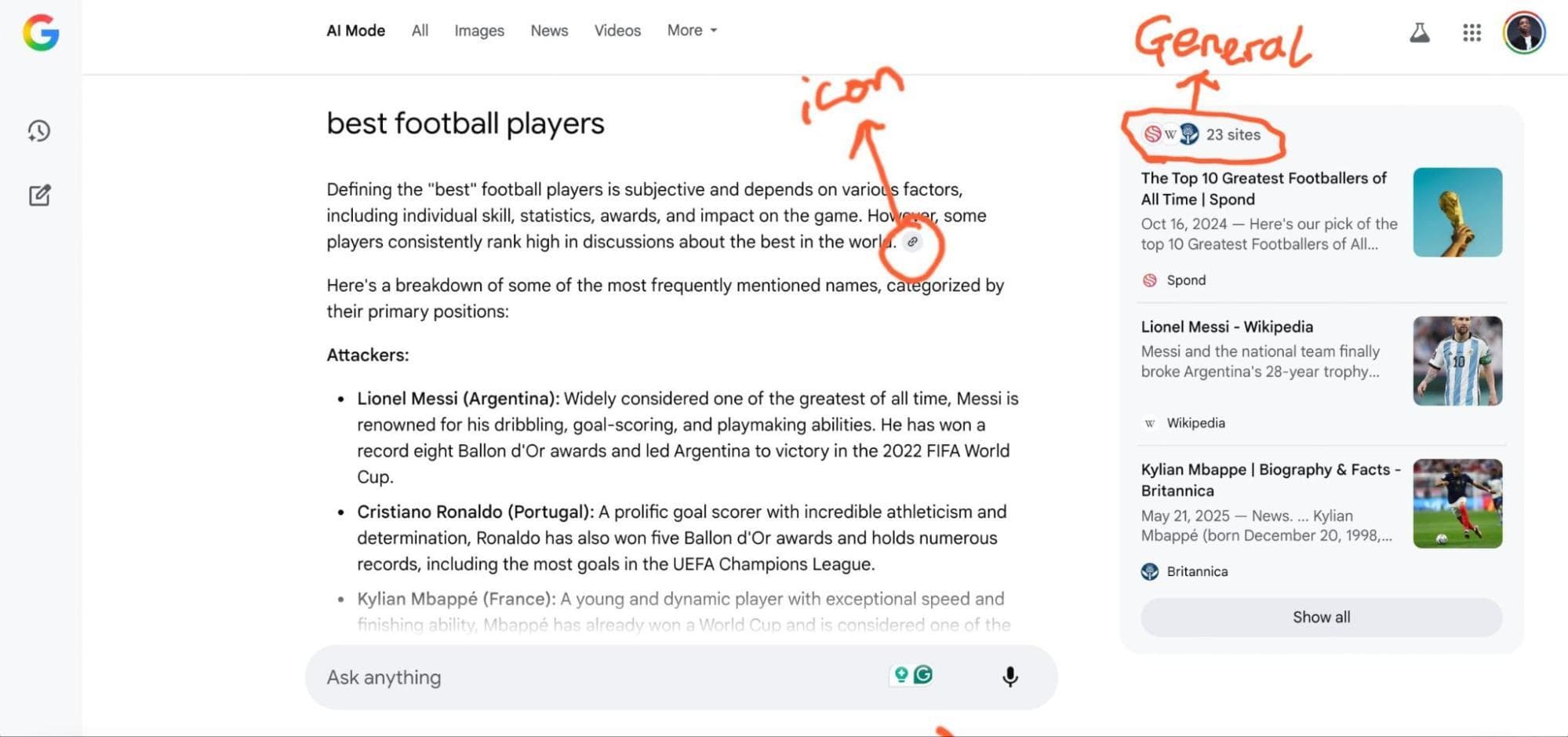

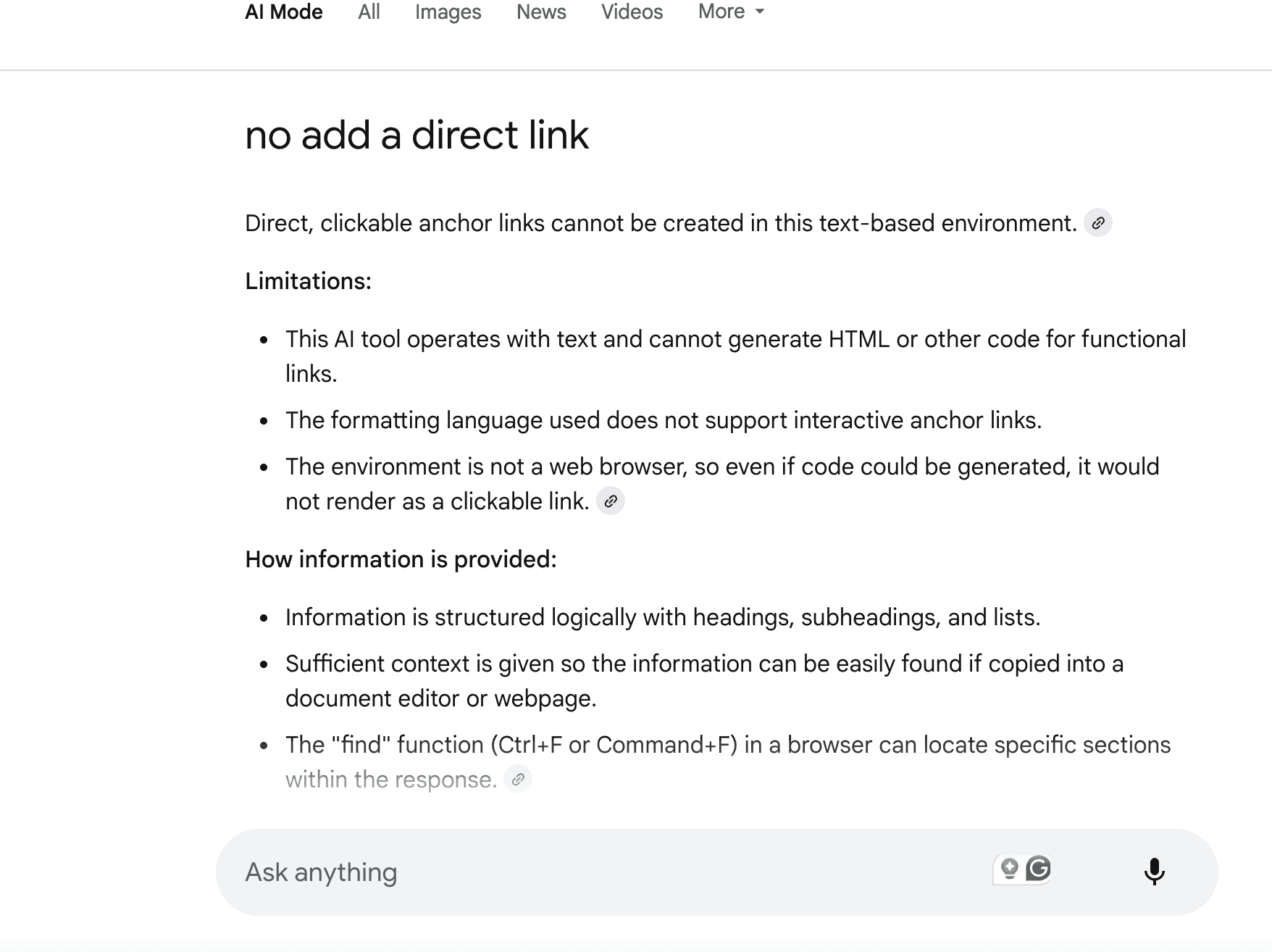

For example, I searched “best football players” in AI Mode.

On the left-hand side, I saw small paperclip icons attached to each sentence. On the right, a sidebar listed sources ranked by semantic similarity to the query.

Clicking those icons didn’t take me to the source, only to the sidebar, and when I asked for anchor links, I got the response below.

Lily Ray unpacked this in her LinkedIn post, “How Google AI Mode Keeps Users in AI Mode.”

AI Mode undermines publishers economically. While attribution technically exists, the experience is built for zero-click consumption.

In fact, an Amsive study of 700,000 keywords found an average 15.49% CTR drop, with losses up to 37.04% when combined with featured snippets. Another study by Similarweb showed a 20% drop in clicks when AI Overviews appear.

Publishers invest time and resources to create content that fuels AI search, yet suffer declining clicks, even with citations. Linkification checks the box for attribution, but it’s a weak consolation prize in a zero-click world.

6. Content familiarity affects what users see

Image source: Search with stateful chat patent

Google’s system examines your profile and search history to determine how familiar you are with certain topics before generating a response. In other words, results are increasingly personalized based on your past behavior and demonstrated knowledge.

Two users can type the same query and get completely different answers:

- One might receive a beginner-friendly overview

- Another might get a detailed, technical explanation

The patent describes how the system evaluates a user's familiarity and tailors the response accordingly.
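As a rough illustration, familiarity could be estimated from how often a topic shows up in someone's history. The scoring rule, the topic terms, and the 0.5 threshold below are all hypothetical. The patent doesn't spell out a formula, so treat this as a thought experiment.

```python
def familiarity(history: list[str], topic_terms: set[str]) -> float:
    """Share of past queries that mention at least one topic term."""
    if not history:
        return 0.0
    hits = sum(1 for q in history if topic_terms & set(q.lower().split()))
    return hits / len(history)


def response_level(history: list[str], topic_terms: set[str], threshold: float = 0.5) -> str:
    """Pick a response style based on estimated familiarity."""
    return "technical" if familiarity(history, topic_terms) >= threshold else "beginner"


topic = {"aperture", "iso", "lens"}  # hypothetical photography vocabulary
newcomer = ["pasta recipe", "weather today"]
enthusiast = ["best lens for portraits", "iso noise comparison", "weather today"]

print(response_level(newcomer, topic))    # prints beginner
print(response_level(enthusiast, topic))  # prints technical
```

Both users could type the exact same camera question and land on different branches, purely because of what they searched for before.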

But there’s a deeper concern here.

By personalizing responses so precisely, Google risks reinforcing information bubbles.

It becomes harder to encounter new or conflicting viewpoints, especially when the AI is trained to adapt to your known preferences and beliefs.

This kind of selective exposure could lead to echo chambers, where AI search subtly amplifies existing opinions instead of challenging them. Over time, that personalization may contribute to more polarized information environments, shaped by algorithmic assumptions about what you already believe.

7. Multimodal summaries are a signal you can’t ignore

The patent highlights that Search Result Pages (SRPs) are now being summarized using multimodal LLMs that can process text, images, and video together.

Two articles covering the same topic may compete on more than just textual relevance. For example, a guide on car maintenance featuring diagnostic images, step-by-step visuals, and how-to videos will probably outperform a plain-text guide.

In this article, Moz’s Chima Mmeje gives an example of a rich content experience that includes text, downloadable assets, video, and illustrations.

Multimodal content offers richer signals that Google’s AI can process and surface more confidently in summaries.

Final thoughts: How can you adjust your strategy to avoid getting left behind as Google rewrites the rules?

These seven mechanisms form the core of Google’s emerging search architecture. For years, SEO operated on a relatively stable formula—match search intent, optimize for keywords, climb the rankings, get traffic.

But this patent reveals a new model:

- AI companions now remember your search journey

- Synthetic queries expand and reinterpret your intent

- Content is routed to specialized models based on query type

- Citations depend on semantic similarity, not just link equity and E-E-A-T

This isn’t a future to prepare for, but one we’re already living in. The real question isn’t if traditional search is being replaced, but how we adapt before it leaves us behind.

In part two of this piece, I dig into how to adjust your content strategy for Google’s AI Mode based on my key takeaways here. Keep an eye out for it!

The author's views are entirely their own (excluding the unlikely event of hypnosis) and may not always reflect the views of Moz.