Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies.

PDF for link building - avoiding duplicate content

-

Hello,

We've got an article that we're turning into a PDF. Both the article and the PDF will be on our site. This PDF is a good, thorough piece of content on how to choose a product.

We're going to strip out all of our own links from the article and create this PDF so that it will be good for people to reference and even print. Then we're going to do link building through outreach, since people will find the article and PDF useful.

My question is, how do I use rel="canonical" to make sure that the article and PDF aren't duplicate content?

Thanks.

-

Hey Bob

I think you should forget about any kind of perceived conventions and have whatever you think works best for your users and goals.

Again, look at Unbounce: that is a custom landing page with a homepage link (to share the love) but without the general site navigation.

They also have a footer to do a bit more link love but really, do what works for you.

Forget conventions - do what works!

Hope that helps

Marcus -

I see, thanks! I think it's important not to have the ecommerce navigation on the page promoting the PDF. What would you say is ideal for the graphical and navigation components of the page that hosts the PDF? What kind of navigation and graphical header should I have on it?

-

Yep, check the HTTP headers with webbug or there are a bunch of browser plugins that will let you see the headers for the document.
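If you'd rather script the check than use a browser plugin, here is a minimal Python sketch (standard library only) that sends a HEAD request and pulls the rel="canonical" target out of any Link headers. The function names are made up for illustration. The parser assumes the simple `<URL>; rel="canonical"` form discussed in this thread and does not cover every edge case of the Link header syntax.

```python
import re
import urllib.request


def canonical_from_link_header(link_value):
    """Return the URL of the first rel="canonical" entry in a Link header value, or None."""
    # A Link header may hold several comma-separated entries, e.g.
    #   <http://a.example/x>; rel="alternate", <http://a.example/y>; rel="canonical"
    for match in re.finditer(r"<([^>]*)>([^,<]*)", link_value or ""):
        url, params = match.group(1), match.group(2)
        # Accept rel=canonical with or without quotes, case-insensitively.
        if re.search(r';\s*rel\s*=\s*"?canonical(?=["\s;]|$)', params, re.IGNORECASE):
            return url
    return None


def fetch_canonical(url, timeout=10):
    """Send a HEAD request to url and return the canonical URL from its Link header(s)."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # get_all() returns every Link header, because a server may send more than one.
        for value in response.headers.get_all("Link") or []:
            canonical = canonical_from_link_header(value)
            if canonical:
                return canonical
    return None
```

For example, `fetch_canonical("http://www.domain.com/pdfs/white-papers.pdf")` (a hypothetical URL) should return the article's URL once the .htaccess rule is live, or `None` if no canonical header is being sent.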

That said, I would push to drive the links to the page rather than the document itself: just create a nice page that houses the document and make that the link target.

You could even make the PDF link available only by email once people have signed up, or some such. Canonical is only a directive, and you would still be better off getting those links flooding into a real page on the site.

You could also offer up some ready-made HTML that links to your main page, to make it easier for folks to link to you. If you take a look at any savvy infographic, folks will try to draw the link into a page rather than to the image itself, for the very same reasons.

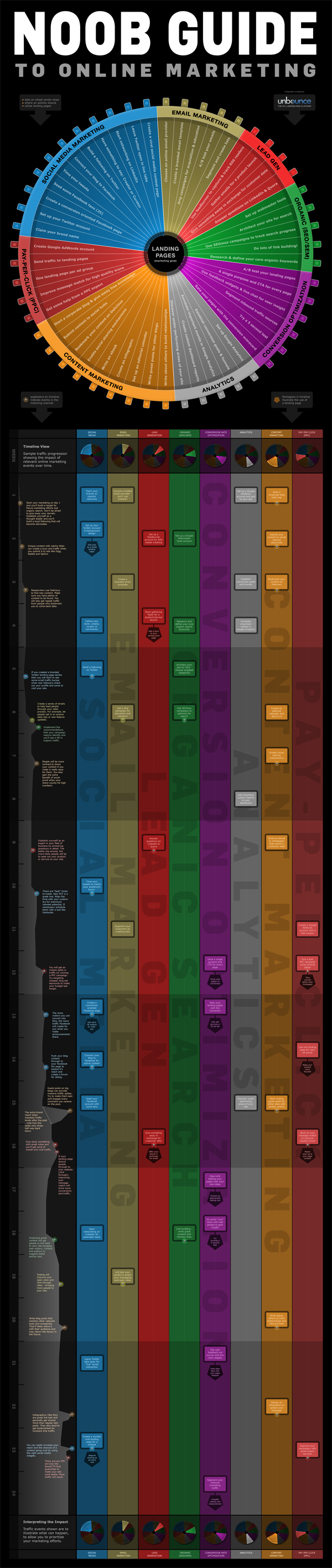

If you look at something like the Noobs Guide to Online Marketing from Unbounce then you will see something like this as the suggested linking code:

(The suggested code is the infographic image linked to http://unbounce.com/noob-guide-to-online-marketing-infographic/, followed by a text link to the Unbounce homepage reading "Unbounce – The DIY Landing Page Platform".)

So, the image is there but the link they are pimping is a standard page:

http://unbounce.com/noob-guide-to-online-marketing-infographic/

They also cheekily add an extra homepage link in as well with some keywords and the brand so if folks don't remove that they still get that benefit.

Ultimately, it means that when links flood into the site they benefit the whole site rather than just promote one PDF.
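As a rough illustration of the same idea, the Python sketch below builds a copy-and-paste snippet where the image links to a normal HTML page and a second text link points at the homepage. All URLs and anchor text are hypothetical placeholders, not Unbounce's actual code, and `embed_snippet` is a name made up for this example.

```python
from html import escape


def embed_snippet(page_url, image_url, alt_text, home_url, home_anchor):
    """Return embed HTML: the image links to a landing page, plus a homepage text link."""
    return (
        # The image itself links to the HTML page, not to the image or PDF file.
        f'<a href="{escape(page_url)}">'
        f'<img src="{escape(image_url)}" alt="{escape(alt_text)}" /></a><br />\n'
        # The extra homepage link carries the brand/keyword anchor text.
        f'<a href="{escape(home_url)}">{escape(home_anchor)}</a>'
    )
```

Escaping every value keeps characters such as `&` or quotes in a URL or anchor text from breaking the HTML that other sites paste in.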

Just my tuppence!

Marcus -

Thanks for the code Marcus.

Actually, the PDF is what people will be linking to. It's a guide for websites. I think the PDF will be much easier to promote than the article. I assume so, anyway.

Is there a way to make sure my canonical code in htaccess is working after I insert the code?

Thanks again,

Bob

-

Hey Bob

There is a much easier way to do this: simply put the PDFs you don't want indexed in a folder that you block in robots.txt. That way you can just drop PDFs into articles and link to them, knowing full well these files will not be indexed.

Assuming you had a PDF called article.pdf in a folder called pdfs/ then the following would prevent indexation.

    User-agent: *
    Disallow: /pdfs/

Or to just block the file itself:

    User-agent: *
    Disallow: /pdfs/yourfile.pdf

Additionally, there is no reason not to add the canonical link as well. If you find people are linking directly to the PDF, having it would ensure that the equity associated with those links is correctly attributed to the parent page (always a good thing). In your .htaccess, scoped to the PDF so the header isn't sent with every file:

    <Files "article.pdf">
    Header add Link '<http://www.url.co.uk/pdfs/article.html>; rel="canonical"'
    </Files>

Generally, there are better ways to block indexation than robots.txt, but in the case of PDFs we really don't want these files indexed: they make for such poor landing pages (no navigation), and we certainly want to remove any competition or duplication between the page and the PDF. In this case, it makes for a quick, painless and suitable solution.
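Before relying on robots.txt rules like these, you can check them offline with Python's built-in `urllib.robotparser`. This is a minimal sketch: the domain and file names are hypothetical, and `is_blocked` is a helper name made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# The folder-level rule from above; the domain and paths below are hypothetical.
ROBOTS_TXT = """\
User-agent: *
Disallow: /pdfs/
"""


def is_blocked(robots_txt, url, agent="Googlebot"):
    """Return True if the robots.txt text disallows crawling url for the given user agent."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in ("http://www.domain.com/pdfs/white-papers.pdf",
                "http://www.domain.com/articles/article.html"):
        print(url, "blocked" if is_blocked(ROBOTS_TXT, url) else "allowed")
```

This only tests the crawl rules themselves. A compliant crawler that is blocked from /pdfs/ won't request those files at all, so it also won't see any Link header they send.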

Hope that helps!

Marcus -

Thanks ThompsonPaul,

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.html

do I simply add this to my htaccess file?:

    Header add Link '<http://www.domain.com/articles/article.html>; rel="canonical"'

-

You can insert the canonical header link using your site's .htaccess file, Bob. I'm sure HostGator provides access to the .htaccess file through FTP (sometimes you have to turn on "show hidden files") or through the file manager built into your cPanel.

Check tip #2 in this recent SEOMoz blog article for specifics:

seomoz.org/blog/htaccess-file-snippets-for-seos

Just remember, too, that you will want to do the same kind of on-page optimization for the PDF as you do for regular pages.

- Give it a good, descriptive, keyword-appropriate, dash-separated file name. (essential for usability as well, since it will become the title of the icon when saved to someone's desktop)

- Fill out the metadata for the PDF, especially the Title and Description. In Acrobat it's under File -> Properties -> Description tab (to get the meta-description itself, you'll need to click on the Additional Metadata button)
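For the file-name point, a small Python helper can turn a PDF's title into a descriptive, dash-separated file name. This is only a sketch: the lowercase/ASCII/dash rules are one reasonable convention, and `pdf_filename` is a hypothetical name.

```python
import re
import unicodedata


def pdf_filename(title):
    """Turn a document title into a lowercase, dash-separated .pdf file name."""
    # Fold accented characters to plain ASCII where possible (e.g. "é" becomes "e").
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Drop apostrophes so "Buyer's" becomes "buyers" rather than "buyer-s".
    cleaned = ascii_title.lower().replace("'", "")
    # Replace each run of non-alphanumeric characters with a single dash, trimming the ends.
    slug = re.sub(r"[^a-z0-9]+", "-", cleaned).strip("-")
    return f"{slug}.pdf"
```

For instance, a title like "How to Choose a Product: The Complete Guide" becomes `how-to-choose-a-product-the-complete-guide.pdf`.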

I'd be tempted to build the links to the HTML page as much as possible, as those will directly help ranking, unlike the PDF's inbound links, which will have to pass their link juice through the canonical (assuming you're using it). Plus, the visitor will get a preview of the PDF's content and context from the rest of your site, which may increase trust and engender further engagement.

Your comment about links in the PDF got kind of muddled, but you'll definitely want to make certain there are good links and calls to action back to your website within the PDF - preferably on each page. Otherwise there's no clear "next step" for users reading the PDF back to a purchase on your site. Make sure to put Analytics tracking tags on these links so you can assess the value of traffic generated back from the PDF - otherwise the traffic will just appear as Direct in your Analytics.
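Analytics tracking tags here usually means Google Analytics campaign (UTM) parameters. A minimal Python sketch for appending them to the links you put in the PDF might look like this. `utm_source`, `utm_medium` and `utm_campaign` are the standard parameter names, but the default values and the `tag_link` helper are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_link(url, source="product-guide-pdf", medium="pdf", campaign="link-building"):
    """Return url with Google Analytics campaign (UTM) parameters appended."""
    parts = urlsplit(url)
    # Keep any query parameters the link already has, then add the campaign tags.
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    # _replace is the standard namedtuple method; the fragment (#...) is preserved.
    return urlunsplit(parts._replace(query=urlencode(query)))
```

Running each call-to-action URL through a helper like this before it goes into the PDF means clicks from the document show up under their own source and medium rather than as Direct.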

Hope that all helps;

Paul

-

Can I just use htaccess?

See here: http://www.seomoz.org/blog/how-to-advanced-relcanonical-http-headers

We only have one pdf like this right now and we plan to have no more than five.

Say the pdf is located at

domain.com/pdfs/white-papers.pdf

and the article that I want to rank is at

domain.com/articles/article.pdf

do I simply add this to my htaccess file?:

    Header add Link '<http://www.domain.com/articles/article.pdf>; rel="canonical"'

-

How do I know if I can do an HTTP header request? I'm using shared hosting through HostGator.

-

PDFs don't seem to rank as well as normal web pages. They still rank, don't get me wrong: we have over 100 PDF pages that get traffic for us. Which version is the main one is really up to you; what do you want to show in the search results? I think it would be easier to rank a normal web page, though. If you use rel="canonical", it will pass most of the link juice, not all but most.

-

Thank you DoRM,

I assume that the PDF is what I want to be the main version since that is what I'll be marketing, but I could be wrong? What if I get backlinks to both pages, will both sets of backlinks count?

-

Indicate the canonical version of a URL by responding with the Link rel="canonical" HTTP header. Adding rel="canonical" to the head section of a page is useful for HTML content, but it can't be used for PDFs and other file types indexed by Google Web Search. In these cases you can indicate a canonical URL by responding with the Link rel="canonical" HTTP header, like this (note that to use this option, you'll need to be able to configure your server):

    Link: <http://www.example.com/downloads/white-paper.pdf>; rel="canonical"

Google currently supports these link header elements for Web Search only.
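To make the header example concrete, here is a minimal Python WSGI sketch of a server that returns a PDF together with a `Link: <...>; rel="canonical"` header. It is an illustration only: the path-to-canonical mapping reuses the hypothetical domain.com URLs from earlier in this thread, the PDF body is placeholder bytes, and on shared Apache hosting you would set the same header from .htaccess instead.

```python
# Hypothetical mapping from a PDF's path to the page it should canonicalize to
# (the example URLs used earlier in this thread).
CANONICALS = {
    "/pdfs/white-papers.pdf": "http://www.domain.com/articles/article.html",
}

# Placeholder bytes standing in for the real PDF file contents.
PDF_BYTES = b"%PDF-1.4\n% placeholder\n"


def app(environ, start_response):
    """WSGI app that serves the PDF with a Link rel="canonical" response header."""
    path = environ.get("PATH_INFO", "")
    if path not in CANONICALS:
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not found"]
    headers = [
        ("Content-Type", "application/pdf"),
        ("Content-Length", str(len(PDF_BYTES))),
        # Same shape as Google's example: Link: <URL>; rel="canonical"
        ("Link", f'<{CANONICALS[path]}>; rel="canonical"'),
    ]
    start_response("200 OK", headers)
    return [PDF_BYTES]
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves it on port 8000, and you can then inspect the response headers with any of the tools mentioned above.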

You can read more here: http://support.google.com/webmasters/bin/answer.py?hl=en&answer=139394


Related Questions

-

Upper and lower case URLS coming up as duplicate content

Hey guys and gals, I'm having a frustrating time with an issue. Our site has around 10 pages that are coming up as duplicate content/duplicate title. I'm not sure what I can do to fix this. I was going to attempt to 301 redirect the upper case to lower, but I'm worried how this will affect our SEO. Can anyone offer some insight on what I should be doing? Update: What I'm trying to figure out is what I should do for our URLs. For example, when I run an audit I'm getting two different pages: aaa.com/BusinessAgreement.com and also aaa.com/businessagreement.com. We don't have two pages, but for some reason Google thinks we do.

Intermediate & Advanced SEO | davidmac1

-

Same content, different languages. Duplicate content issue? | international SEO

Hi, if the "content" is the same but written in different languages, will Google see the articles as duplicate content? If Google won't see it as duplicate content, what is the benefit of implementing the alternate lang tag? Kind regards, Jeroen

Intermediate & Advanced SEO | chalet

-

Directory with Duplicate content? what to do?

Moz keeps finding loads of pages with duplicate content on my website. The problem is it's a directory page to different locations. E.g. if we were a clothes shop, we would be listing our locations: www.sitename.com/locations/london, www.sitename.com/locations/rome, www.sitename.com/locations/germany. The content on these pages is all the same, except for an embedded Google map that shows the location of the place. The problem is that Google thinks all these pages are duplicate content. Should I set a canonical link on every single page saying that www.sitename.com/locations/london is the main page? I don't know if I can use canonical links, because the page content isn't identical because of the embedded map. Help would be appreciated. Thanks.

Intermediate & Advanced SEO | nchlondon

-

Case Sensitive URLs, Duplicate Content & Link Rel Canonical

I have a site where URLs are case sensitive. In some cases the lowercase URL is being indexed and in others the mixed-case URL is being indexed. This is leading to duplicate content issues on the site. The site is using link rel canonical to specify a preferred URL in some cases; however, there is no consistency in whether the URLs are lowercase or mixed case. On some pages the link rel canonical tag points to the lowercase URL, on others it points to the mixed-case URL. Ideally I'd like to update all link rel canonical tags and internal links throughout the site to use the lowercase URL, but I'm apprehensive! My question is as follows: if I were to specify the lowercase URL across the site, in addition to updating internal links to use lowercase URLs, could this have a negative impact where the mixed-case URL is the one currently indexed? Hope this makes sense! Dave

Intermediate & Advanced SEO | allianzireland

-

H3 Tags - Should I Link to my content Articles? And do I have too many H3 tags/links as it is?

Hello All, on my ecommerce landing pages, I currently have links to my products as H3 tags. I also have useful guides displayed on the page with links to useful articles we have written (they currently go to my news section). I am wondering if I should put those article links as additional H3 tags as well for added SEO benefit, or do I have too many tags as it is? A link to the landing page I am talking about is http://goo.gl/h838RW. Screenshot of my H1-H6 tags: http://imgur.com/hLtX0n7. I enclose a screenshot of my guides and also of my H1-H6 tags. Any advice would be greatly appreciated. Thanks, Peter

Intermediate & Advanced SEO | PeteC12

-

How to Remove Joomla Canonical and Duplicate Page Content

I've attempted to follow advice from the Q&A section. Currently on the site www.cherrycreekspine.com, I've edited the .htaccess file to help with 301s: all pages redirect to www.cherrycreekspine.com. Secondly, I've added the canonical statement in the header of the web pages. I have cut the duplicate page content in half... now I have 40 remaining pages to fix up. This is my practice site to try and understand what SEOmoz can do for me. I've looked at some of your videos on YouTube... I feel like I'm scrambling around the Q&A and the internet to understand this product. I'm reading the beginner's guide... any other resources would be helpful.

Intermediate & Advanced SEO | deskstudio

-

Capitals in url creates duplicate content?

Hey Guys, I had a quick look around, but I couldn't find a specific answer to this. Currently, the SEOmoz tools come back and show a heap of duplicate content on my site, and there's a fair bit of it. However, a heap of those errors relate to random capitals in the URLs. For example, "www.website.com.au/Home/information/Stuff" is being treated as duplicate content of "www.website.com.au/home/information/stuff" (note the difference in capitals). Does anyone have any recommendations as to how to fix this server side (keeping in mind it's not practical or possible to fix all of these links), or to tell Google to ignore the capitalisation? Any help is greatly appreciated. LM.

Intermediate & Advanced SEO | CarlS

-

Are duplicate links on same page alright?

If I have a homepage with category links, is it alright for those category links to appear in the footer as well, or should you never have duplicate links on one page? Can you please give a reason why as well? Thanks!

Intermediate & Advanced SEO | dkamen