Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content (many posts will remain viewable), we have locked both new posts and new replies.

How to index e-commerce marketplace product pages

-

Hello!

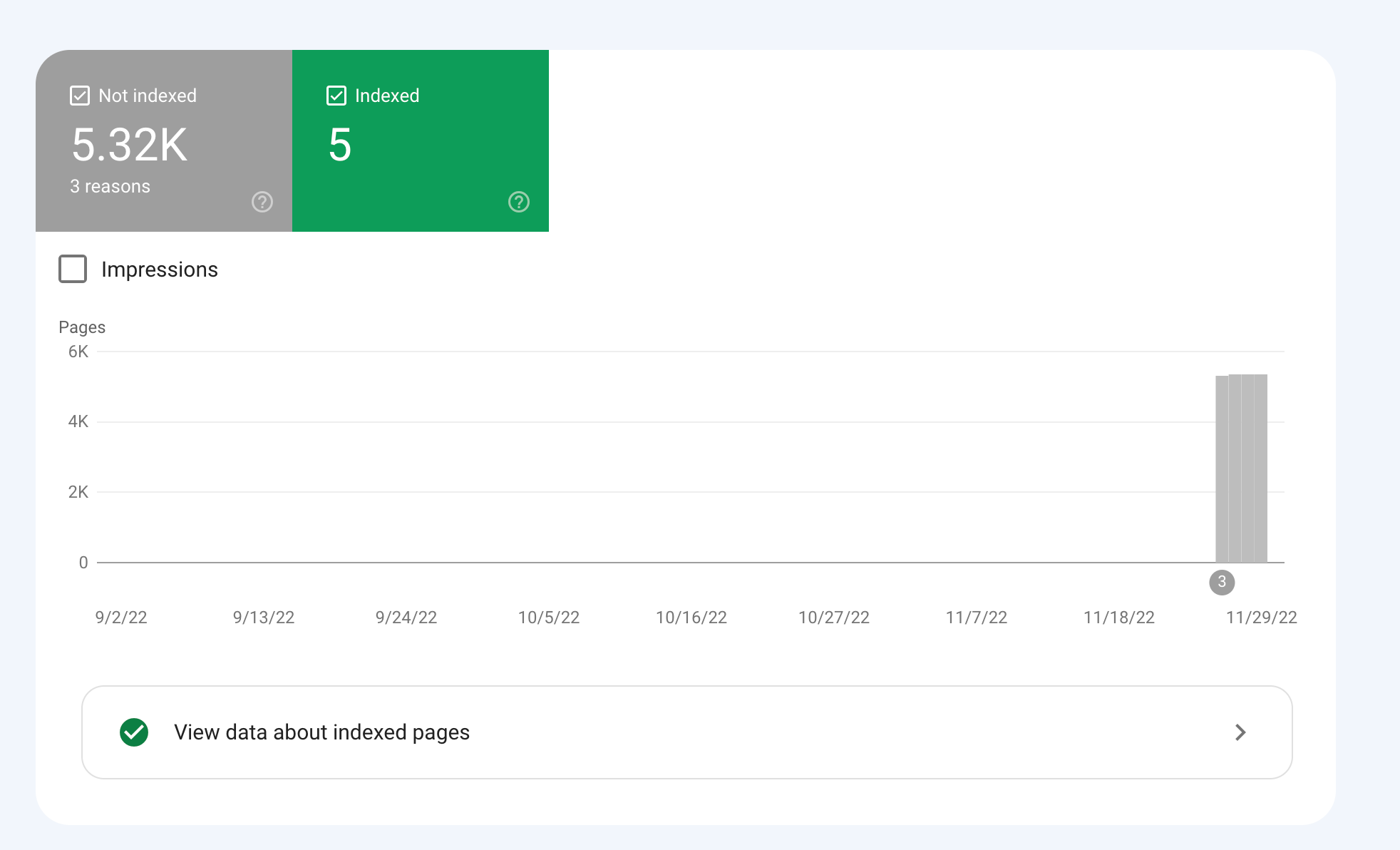

We are an online marketplace and submitted our sitemap through Google Search Console 2 weeks ago. Although the sitemap was submitted successfully, only 25 of our ~10,000 links (we have ~10,000 product pages) have been indexed.

I've attached images of the reasons given for not indexing the platform.

How would we go about fixing this?

-

To get your e-commerce marketplace product pages indexed, make sure your pages include unique and descriptive titles, meta descriptions, relevant keywords, and high-quality images. Additionally, optimize your URLs, leverage schema markup, and prioritize user experience for increased search engine visibility.
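For anyone unsure what "schema markup" looks like in practice, here is a minimal Python sketch that builds schema.org Product JSON-LD for a single listing. The function names `product_jsonld` and `script_tag` and all sample values are hypothetical; the property names come from schema.org's Product and Offer types. Treat it as an illustration, not as the markup any particular platform requires.

```python
import json


def product_jsonld(name, description, image_url, sku, price, currency, url):
    """Return a schema.org Product description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": [image_url],
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "url": url,
            # Price is emitted as a string with two decimals.
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            # Assumption: the item is in stock; adjust per listing.
            "availability": "https://schema.org/InStock",
        },
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld):
    """Wrap a JSON-LD string in the script element placed in the page HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    markup = product_jsonld(
        "Blue Mug", "A ceramic mug.", "https://shop.example/img/mug.jpg",
        "MUG-1", 12.5, "EUR", "https://shop.example/p/mug",
    )
    print(script_tag(markup))
```

The resulting script element would go in each product page's HTML, with values taken from the real listing data.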

-

@fbcosta I have this problem too, but it affects far fewer pages on my site.

-

I'd appreciate it if someone who has faced the same indexing issue could come forward and share a case study with fellow members. What specific steps should someone in this situation take to overcome the indexing problem? What can be done to get product pages indexed quickly? How can we get Google's attention so that it starts indexing pages at a faster pace? Actionable advice, please.

-

There could be several reasons why only 25 out of approximately 10,000 links have been indexed by Google, despite successfully submitting your sitemap through Google Search Console:

Timing: It is not uncommon for indexing to take some time, especially for larger sites with many pages. Although your sitemap has been submitted, it may take several days or even weeks for Google to crawl and index all of your pages. It's worth noting that not all pages on a site may be considered important or relevant enough to be indexed by Google.

Quality of Content: Google may not index pages that it considers low-quality, thin or duplicate content. If a significant number of your product pages have similar or duplicate content, they may not be indexed. To avoid this issue, make sure your product pages have unique, high-quality content that provides value to users.
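One rough way to gauge how much duplicate product copy a marketplace has is to fingerprint each description after normalizing it. The sketch below assumes you can export a mapping of URL to description text; the function names and sample data are hypothetical, and it only catches descriptions that are identical after normalization, not loosely similar ones.

```python
import hashlib
import re
from collections import defaultdict


def normalize(text):
    """Lowercase and collapse punctuation/whitespace so trivial differences are ignored."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def duplicate_groups(descriptions):
    """Return lists of URLs whose normalized descriptions are identical.

    `descriptions` is a dict of {url: description_text}.
    """
    groups = defaultdict(list)
    for url, text in descriptions.items():
        fingerprint = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[fingerprint].append(url)
    # Only groups with more than one URL are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    sample = {
        "/p/1": "Blue cotton T-shirt.",
        "/p/2": "blue  cotton t shirt",
        "/p/3": "Red wool scarf",
    }
    for group in duplicate_groups(sample):
        print("Same description:", ", ".join(group))
```

Pages that land in the same group are candidates for rewritten copy or a canonical tag.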

Technical issues: Your site may have technical problems that prevent Google from crawling and indexing your pages. These could include problems with your site's architecture, duplicate content, or anything else that affects crawling and indexing.
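Two common technical blockers can be checked quickly: a robots.txt rule that disallows product URLs, and a `noindex` robots meta tag in the page HTML. The following is a minimal sketch using only the Python standard library; the function names and sample inputs are hypothetical. Note that `urllib.robotparser` applies the first matching rule, which is not guaranteed to match how Googlebot resolves conflicting Allow/Disallow rules, so treat the result as a hint.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def blocked_by_robots(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Records whether a robots/googlebot meta tag contains 'noindex'."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        content = (attr.get("content") or "").lower()
        if name in ("robots", "googlebot") and "noindex" in content:
            self.noindex = True


def has_noindex(html):
    """Return True if the HTML contains a noindex robots meta tag."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    return finder.noindex


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /product/\n"
    print(blocked_by_robots(robots, "https://shop.example/product/123"))
    print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
```

If either check returns True for pages you want indexed, that is worth fixing before looking at content quality.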

Inaccurate Sitemap: There is also a possibility that there are errors in the sitemap you submitted to Google. Check the sitemap to ensure that all the URLs are valid, the sitemap is up to date and correctly formatted.
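A basic sitemap sanity check can be scripted. The sketch below parses sitemap XML and reports malformed XML, a wrong root element, non-absolute URLs, URLs on an unexpected host, duplicates, and files over the 50,000-URL limit from the sitemaps.org protocol. The function name `sitemap_problems` and the `expected_host` parameter are hypothetical, and it does not fetch the URLs to confirm they return 200.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def sitemap_problems(xml_text, expected_host):
    """Return a list of human-readable problems found in a sitemap document."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag != f"{SITEMAP_NS}urlset":
        return [f"unexpected root element: {root.tag}"]

    problems = []
    entries = root.findall(f"{SITEMAP_NS}url")
    if len(entries) > MAX_URLS:
        problems.append(f"too many URLs in one file: {len(entries)}")

    seen = set()
    for entry in entries:
        loc = (entry.findtext(f"{SITEMAP_NS}loc") or "").strip()
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
        elif parts.netloc != expected_host:
            problems.append(f"unexpected host: {loc}")
        elif loc in seen:
            problems.append(f"duplicate URL: {loc}")
        seen.add(loc)
    return problems


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://shop.example/p/1</loc></url>"
        "<url><loc>/p/2</loc></url>"
        "</urlset>"
    )
    for problem in sitemap_problems(sample, "shop.example"):
        print(problem)
```

An empty list means none of these particular problems were found; it does not prove the sitemap is what Google expects.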

To troubleshoot this issue, check the Page indexing report (formerly the Coverage report) in Google Search Console, which shows which pages have been indexed, which haven't, and the reason given for each. You can also check the Crawl Stats report to see whether technical issues are preventing Google from crawling your pages. Finally, you can run a site audit to identify and fix any technical issues that may be affecting indexing.

-

@fbcosta In my experience, if your site is new, it will take some time for all of the URLs to be indexed. Also, having hundreds of URLs doesn't mean Google will index all of them.

You can try these steps, which should help with faster indexing:

- Sharing on Social Media

- Interlinking from already indexed Pages

- Sitemap

- Share the link on your verified Google My Business profile (in my experience, the best way to get indexed fast). You can add products or create a post and link it to the website.

- Guest post

I am writing here for the first time; I hope this helps.
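For the interlinking suggestion, it can help to find which product pages receive no internal links from pages that are already indexed. This is a small sketch under assumptions: `link_graph` (page to outgoing internal links), `indexed`, and `targets` are hypothetical inputs you would build from your own crawl and Search Console exports.

```python
def pages_lacking_indexed_inlinks(link_graph, indexed, targets):
    """Return the target pages that no already-indexed page links to.

    link_graph: dict of {source_url: iterable of internal URLs it links to}
    indexed:    set of URLs known to be indexed
    targets:    iterable of URLs you want indexed (e.g. product pages)
    """
    linked_from_indexed = set()
    for source, outlinks in link_graph.items():
        if source in indexed:
            linked_from_indexed.update(outlinks)
    return sorted(t for t in targets if t not in linked_from_indexed)


if __name__ == "__main__":
    graph = {
        "/": ["/category/mugs", "/p/1"],
        "/category/mugs": ["/p/1", "/p/2"],
        "/p/3": ["/p/4"],
    }
    indexed_pages = {"/", "/category/mugs"}
    products = ["/p/1", "/p/2", "/p/3", "/p/4"]
    print(pages_lacking_indexed_inlinks(graph, indexed_pages, products))
```

Pages in the output are candidates for new links from indexed category or home pages.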

