Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

Is it good practice to still pay for Best of the Web Directory (BOTW) and other similar one's you have to pay for?

-

I know that paid for links are hit by Google, but in the past these directories were okay. What about now?

Thank you.

-

"If you pay for a directory link only to gain position in the SERPs you will most probably not get any ROI"

Meaning if you are on the first page there won`t be ROI? I dont think so!

-

There are exceptions to the "paid for" link rule and I believe this site is one of them as they qualify there links! However whether you'll get ROI from it is another question!

-

Hi,

Well in general I agree with Arjan, I am strongly of the opinion that some directories are still VERY worthwhile, and BOTW is one of them. When you are launching a brand new site, you just aren't going to have a lot of attention and inbound lin opportunities at first. Submitting your site to DMOZ (which is free), Yahoo.dir (Paid), Business.com (Paid), JoeAnt.com (Paid) and yes, BOTW (Paid) are all most definitely worthwhile. IMHO they send a signal to the search engines that you are a legitimate business. That's a very important message to send when you are a startup.

In addition to those I think there are some others that are worth it as well, depending on your particular business, particularly localized directories and directories targeting certain niche markets.

Hope that's helpful!

Dana

-

My advice will be to only pay for directory links that drive enough traffic to your website directly to get a proper ROI.

If you pay for a directory link only to gain position in the SERPs you will most probably not get any ROI.

If you get the link from a directory Google does not like because it is known to be selling links it could even hurt your rankings.In short: Paid directory links are not good for SEO.

Browse Questions

Explore more categories

-

Moz Tools

Chat with the community about the Moz tools.

-

SEO Tactics

Discuss the SEO process with fellow marketers

-

Community

Discuss industry events, jobs, and news!

-

Digital Marketing

Chat about tactics outside of SEO

-

Research & Trends

Dive into research and trends in the search industry.

-

Support

Connect on product support and feature requests.

Related Questions

-

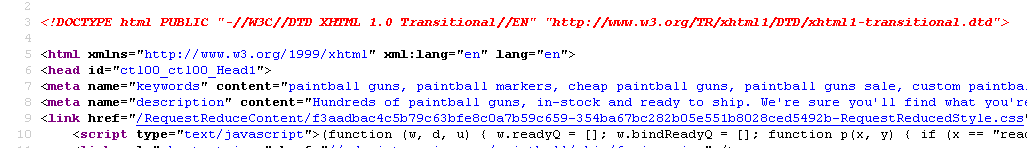

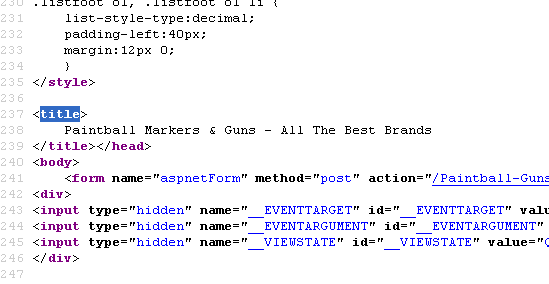

Does Title Tag location in a page's source code matter?

Currently our meta description is on line 8 for our page - http://www.paintball-online.com/Paintball-Guns-And-Markers-0Y.aspx

Technical SEO | | Istoresinc The title tag, however sits below a bunch of code on line 237

The title tag, however sits below a bunch of code on line 237

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0

Does the location of the title tag, meta tags, and any structured data have any influence with respect to SEO and search engines? Put another way, could we benefit from moving the title tag up to the top?

I "surfed 'n surfed" and could not find any articles about this.

I would really appreciate any help on this as our site got decimated organically last May and we are looking for any help with SEO.

NIck

0 -

Disallow: /404/ - Best Practice?

Hello Moz Community, My developer has added this to my robots.txt file: Disallow: /404/ Is this considered good practice in the world of SEO? Would you do it with your clients? I feel he has great development knowledge but isn't too well versed in SEO. Thank you in advanced, Nico.

Technical SEO | | niconico1011 -

Is there a way for me to automatically download a website's sitemap.xml every month?

From now on we want to store all our sitemap.xml over the next years. Its a nice archive to have that allows us to analyse how many pages we have on our website and which ones were removed/redirected. Any suggestions? Thanks

Technical SEO | | DeptAgency0 -

Best way to handle pages with iframes that I don't want indexed? Noindex in the header?

I am doing a bit of SEO work for a friend, and the situation is the following: The site is a place to discuss articles on the web. When clicking on a link that has been posted, it sends the user to a URL on the main site that is URL.com/article/view. This page has a large iframe that contains the article itself, and a small bar at the top containing the article with various links to get back to the original site. I'd like to make sure that the comment pages (URL.com/article) are indexed instead of all of the URL.com/article/view pages, which won't really do much for SEO. However, all of these pages are indexed. What would be the best approach to make sure the iframe pages aren't indexed? My intuition is to just have a "noindex" in the header of those pages, and just make sure that the conversation pages themselves are properly linked throughout the site, so that they get indexed properly. Does this seem right? Thanks for the help...

Technical SEO | | jim_shook0 -

Ecommerce website: Product page setup & SKU's

I manage an E-commerce website and we are looking to make some changes to our product pages to try and optimise them for search purposes and to try and improve the customer buying experience. This is where my head starts to hurt! Now, let's say I am selling a T shirt that comes in 4 sizes and 6 different colours. At the moment my website would have 24 products, each with pretty much the same content (maybe differing references to the colour & size). My idea is to change this and have 1 main product page for the T-shirt, but to have 24 product SKU's/variations that exist to give the exact product details. Some different ways I have been considering to do this: a) have drop-down fields on the product page that ask the customer to select their Tshirt size and colour. The image & price then changes on the page. b) All product 24 product SKUs sre listed under the main product with the 'Add to Cart' open next to each one. Each one would be clickable so a page it its own right. Would I need to set up a canonical links for each SKU that point to the top level product page? I'm obviously looking to minimise duplicate content but Im not exactly sure on how to set this up - its a big decision so I need to be 100% clear before signing off on anything. . Any other tips on how to do this or examples of good e-commerce websites that use product SKus well? Kind regards Tom

Technical SEO | | DHS_SH0 -

Best Practices for adding Dynamic URL's to XML Sitemap

Hi Guys, I'm working on an ecommerce website with all the product pages using dynamic URL's (we also have a few static pages but there is no issue with them). The products are updated on the site every couple of hours (because we sell out or the special offer expires) and as a result I keep seeing heaps of 404 errors in Google Webmaster tools and am trying to avoid this (if possible). I have already created an XML sitemap for the static pages and am now looking at incorporating the dynamic product pages but am not sure what is the best approach. The URL structure for the products are as follows: http://www.xyz.com/products/product1-is-really-cool

Technical SEO | | seekjobs

http://www.xyz.com/products/product2-is-even-cooler

http://www.xyz.com/products/product3-is-the-coolest Here are 2 approaches I was considering: 1. To just include the dynamic product URLS within the same sitemap as the static URLs using just the following http://www.xyz.com/products/ - This is so spiders have access to the folder the products are in and I don't have to create an automated sitemap for all product OR 2. Create a separate automated sitemap that updates when ever a product is updated and include the change frequency to be hourly - This is so spiders always have as close to be up to date sitemap when they crawl the sitemap I look forward to hearing your thoughts, opinions, suggestions and/or previous experiences with this. Thanks heaps, LW0 -

Found a Typo in URL, what's the best practice to fix it?

Wordpress 3.4, Yoast, Multisite The URL is supposed to be "www.myexample.com/great-site" but I just found that it's "www.myexample.com/gre-atsite" It is a relatively new site but we already pointed several internal links to "www.myexample.com/gre-atsite" What's the best practice to correct this? Which option is more desirable? 1.Creating a new page I found that Yoast has "301 redirect" option in the Advanced tap Can I just create a new page(exact same page) and put noindex, nofollow and redirect it to http://www.myexample.com/great-site OR 2. htacess redirect rule simply change the URL to http://www.myexample.com/great-site and update it, and add Options +FollowSymLinks RewriteEngine On

Technical SEO | | joony2008

RewriteCond %{HTTP_HOST} ^http://www.myexample.com/gre-atsite$ [NC]

RewriteRule ^(.*)$ http://www.myexample.com/great-site$1 [R=301,L]0 -

Should we use Google's crawl delay setting?

We’ve been noticing a huge uptick in Google’s spidering lately, and along with it a notable worsening of render times. Yesterday, for example, Google spidered our site at a rate of 30:1 (google spider vs. organic traffic.) So in other words, for every organic page request, Google hits the site 30 times. Our render times have lengthened to an avg. of 2 seconds (and up to 2.5 seconds). Before this renewed interest Google has taken in us we were seeing closer to one second average render times, and often half of that. A year ago, the ratio of Spider to Organic was between 6:1 and 10:1. Is requesting a crawl-delay from Googlebot a viable option? Our goal would be only to reduce Googlebot traffic, and hopefully improve render times and organic traffic. Thanks, Trisha

Technical SEO | | lzhao0