Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we’re not completely removing the content - many posts will still be possible to view - we have locked both new posts and new replies. More details here.

How to index e-commerce marketplace product pages

-

Hello!

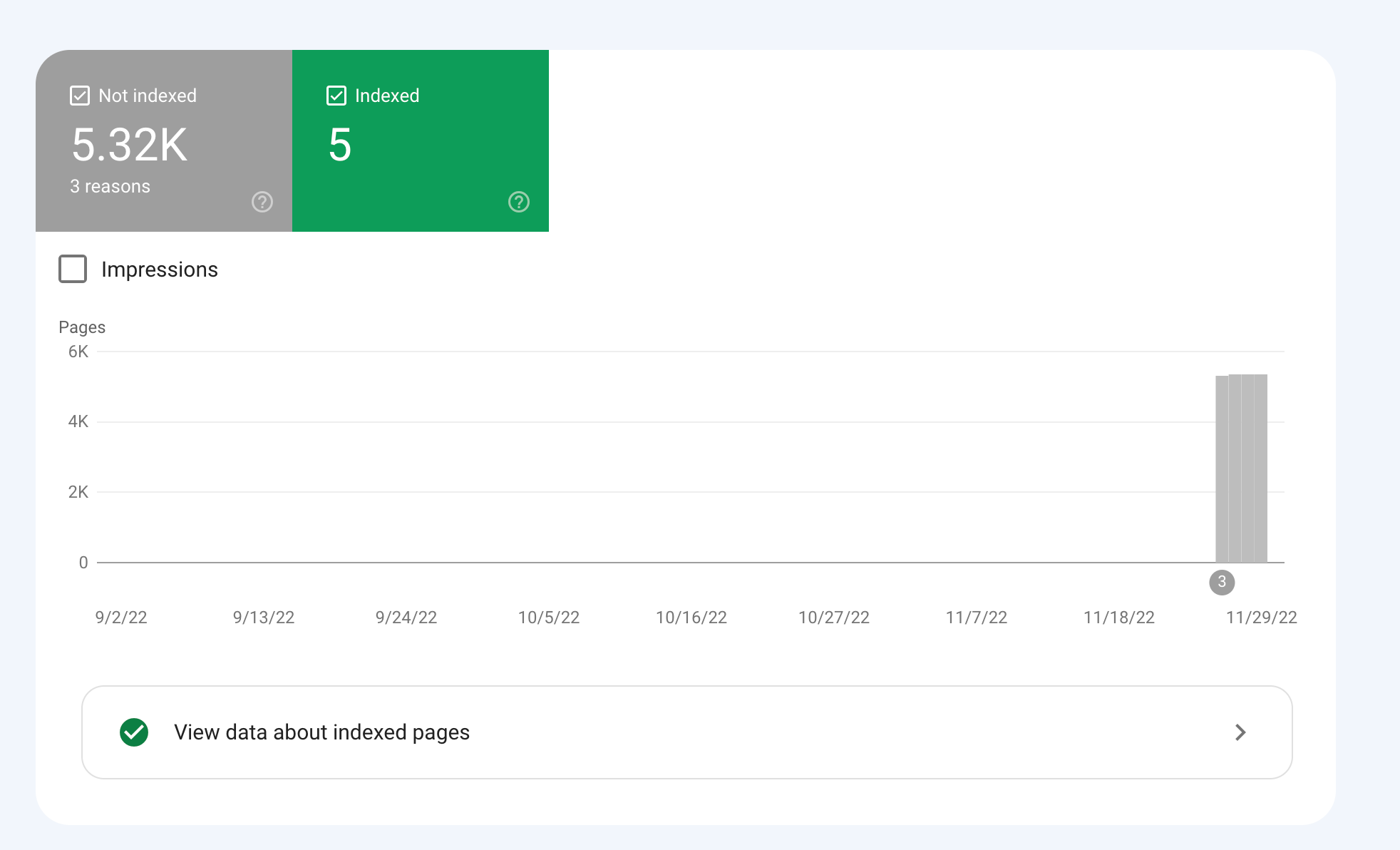

We are an online marketplace that submitted our sitemap through Google Search Console 2 weeks ago. Although the sitemap has been submitted successfully, out of ~10000 links (we have ~10000 product pages), we only have 25 that have been indexed.

I've attached images of the reasons given for not indexing the platform.

How would we go about fixing this?

-

To get your e-commerce marketplace product pages indexed, make sure your pages include unique and descriptive titles, meta descriptions, relevant keywords, and high-quality images. Additionally, optimize your URLs, leverage schema markup, and prioritize user experience for increased search engine visibility.
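For the schema markup part, here is a minimal sketch of generating schema.org Product JSON-LD for a product page. The function names, fields and example values are made up for illustration, and a real marketplace would likely need more properties (brand, reviews, seller), so treat it as a starting point rather than a complete implementation.

```python
import json


def product_jsonld(name, description, image_url, sku, price, currency, in_stock=True):
    """Build a schema.org Product object and return it as a JSON-LD string.

    All parameter names are hypothetical; map them to your own product data.
    """
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "image": [image_url],
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    return json.dumps(data, indent=2)


def as_script_tag(jsonld):
    """Wrap the JSON-LD in the script tag that goes into the page HTML."""
    # Escape "</" so a stray "</script>" in product text cannot close the tag;
    # "\/" is a legal JSON escape for "/".
    safe = jsonld.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + safe + "\n</script>"


if __name__ == "__main__":
    markup = product_jsonld(
        "Blue Mug", "A hand-glazed mug.", "https://example.com/mug.jpg",
        "MUG-1", 12.5, "EUR",
    )
    print(as_script_tag(markup))
```

Markup like this does not force indexing by itself; it only helps search engines understand what the page is about once it is crawled.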

-

@fbcosta I have this problem too, but it affects far fewer pages on my site.

-

I'd appreciate it if someone who has faced the same indexing issue could come forward and share a case study with fellow members. Please pinpoint the steps an affected site owner should take to overcome the indexing problem. What actionable steps enable quick product indexing? How can we get Google's attention so that it starts indexing pages at a quick pace? Actionable advice, please.

-

There could be several reasons why only 25 out of approximately 10,000 links have been indexed by Google, despite successfully submitting your sitemap through Google Search Console:

Timing: It is not uncommon for indexing to take some time, especially for larger sites with many pages. Although your sitemap has been submitted, it may take several days or even weeks for Google to crawl and index all of your pages. It's worth noting that not all pages on a site may be considered important or relevant enough to be indexed by Google.

Quality of Content: Google may not index pages that it considers low-quality, thin or duplicate content. If a significant number of your product pages have similar or duplicate content, they may not be indexed. To avoid this issue, make sure your product pages have unique, high-quality content that provides value to users.
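One rough way to see how much of a catalogue is near-duplicate is to compare product descriptions pairwise. The sketch below uses Jaccard similarity over three-word shingles; the 0.8 threshold, the function names and the sample data are all assumptions for illustration, and this is not how Google itself measures duplication.

```python
from itertools import combinations


def shingles(text, size=3):
    """Return the set of overlapping word n-grams in a description."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8):
    """Return (url_a, url_b, score) for every pair at or above the threshold.

    `pages` maps a URL to its description text. The threshold is an
    arbitrary illustrative value, not a known search engine cutoff.
    """
    sets = {url: shingles(text) for url, text in pages.items()}
    flagged = []
    for u, v in combinations(sorted(sets), 2):
        score = jaccard(sets[u], sets[v])
        if score >= threshold:
            flagged.append((u, v, round(score, 2)))
    return flagged


if __name__ == "__main__":
    sample = {
        "/p/1": "red cotton shirt with short sleeves and a classic collar",
        "/p/2": "red cotton shirt with short sleeves and a classic collar",
        "/p/3": "stainless steel water bottle that keeps drinks cold all day",
    }
    print(near_duplicates(sample))
```

The pairwise comparison is quadratic, so for ~10,000 product pages it is more practical to run it on a sample or within one category at a time.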

Technical issues: Your site may have technical issues that are preventing Google from crawling and indexing your pages. These issues could include problems with your site's architecture, duplicate content, or other issues that may impact crawling and indexing.
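Two common technical blockers can be checked offline with the Python standard library: a robots.txt rule that disallows the URL, and a robots meta tag carrying `noindex`. This is a minimal sketch; the helper names and sample inputs are hypothetical, and it does not cover the `X-Robots-Tag` HTTP header or JavaScript-injected tags.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def has_noindex(html):
    """True if the page HTML asks crawlers not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


def blocked_by_robots(robots_txt, url, agent="Googlebot"):
    """True if the given robots.txt text disallows `url` for `agent`."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return not rules.can_fetch(agent, url)


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /cart/\n"
    print(blocked_by_robots(robots, "https://example.com/cart/1"))
    print(has_noindex('<meta name="robots" content="noindex, follow">'))
```

Running both checks over a sample of the non-indexed URLs is a quick way to rule these causes in or out before looking at content quality.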

Inaccurate Sitemap: There is also a possibility that there are errors in the sitemap you submitted to Google. Check the sitemap to ensure that all the URLs are valid, the sitemap is up to date and correctly formatted.
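A basic sitemap sanity check can be scripted. The sketch below parses a sitemap that has already been downloaded and reports relative URLs, URLs on a different host, duplicates, and files over the protocol's limit of 50,000 URLs. The function name and the `expected_host` parameter are assumptions for illustration; a sitemap index file (root element `sitemapindex`) would be reported as an unexpected root here and needs its child sitemaps checked individually.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def check_sitemap(xml_text, expected_host):
    """Return (locs, problems) for a <urlset> sitemap given as text."""
    root = ET.fromstring(xml_text)
    problems = []
    if root.tag != SITEMAP_NS + "urlset":
        problems.append(f"unexpected root element: {root.tag}")

    locs = [(el.text or "").strip() for el in root.iter(SITEMAP_NS + "loc")]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} per-file limit")

    seen = set()
    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
        elif parts.netloc != expected_host:
            problems.append(f"host differs from {expected_host}: {loc}")
        if loc in seen:
            problems.append(f"duplicate entry: {loc}")
        seen.add(loc)
    return locs, problems


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/p/1</loc></url>"
        "<url><loc>/p/2</loc></url>"
        "<url><loc>https://example.com/p/1</loc></url>"
        "</urlset>"
    )
    urls, issues = check_sitemap(sample, "example.com")
    for issue in issues:
        print(issue)
```

This only validates the file itself; whether each listed URL returns a 200 status and is canonical still has to be checked against the live site.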

To troubleshoot this issue, check the Page indexing report (formerly the Coverage report) in Google Search Console, which shows which pages have been indexed and the reason given for each group that has not. The Crawl stats report can show whether technical issues are preventing Google from crawling your pages. Finally, run a site audit to identify and fix any remaining technical issues that may be affecting indexing.

-

@fbcosta In my experience, if your site is new it will take some time for all of the URLs to be indexed. Also, having hundreds of URLs doesn't mean Google will index all of them.

You can try these steps, which can help with faster indexing:

- Sharing on Social Media

- Interlinking from already indexed Pages

- Sitemap

- Share the link on your verified Google My Business profile (the best way to get indexed fast). You can add products or create a post and link it to the website.

- Guest post

I am writing here for the first time; I hope this helps.

