Moz Q&A is closed.

After more than 13 years, and tens of thousands of questions, Moz Q&A closed on 12th December 2024. Whilst we're not completely removing the content (many posts will still be possible to view), we have locked both new posts and new replies.

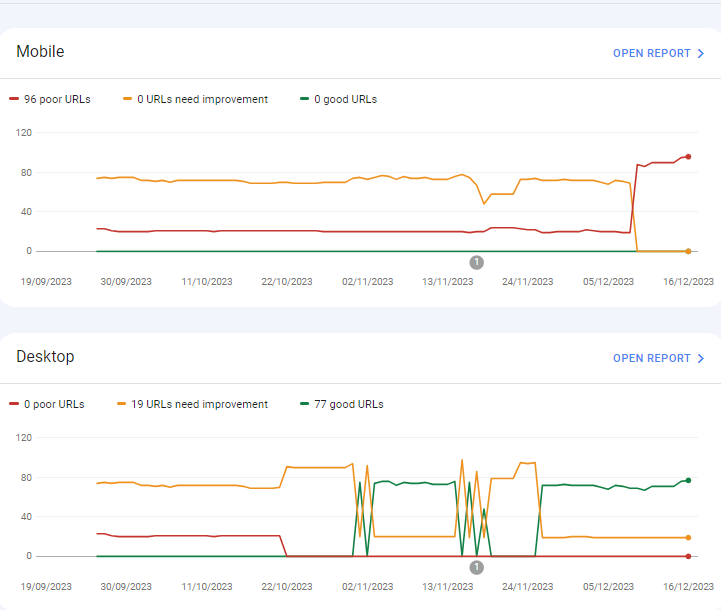

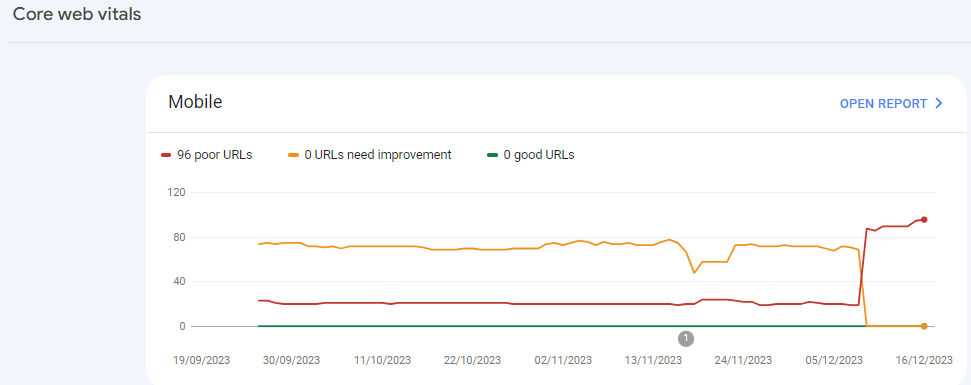

Sudden Drop in Mobile Core Web Vitals

-

For some reason, after all URLs had previously been classified as Good, our mobile Core Web Vitals report suddenly shifted to what is shown above, and the change doesn't correspond to any site changes on our end.

Has anyone else experienced something similar, or does anyone have an idea what might have caused such a shift?

Curiously, I'm not seeing a drop in session duration, conversion rate, etc. for mobile traffic despite the seemingly sudden change.

-

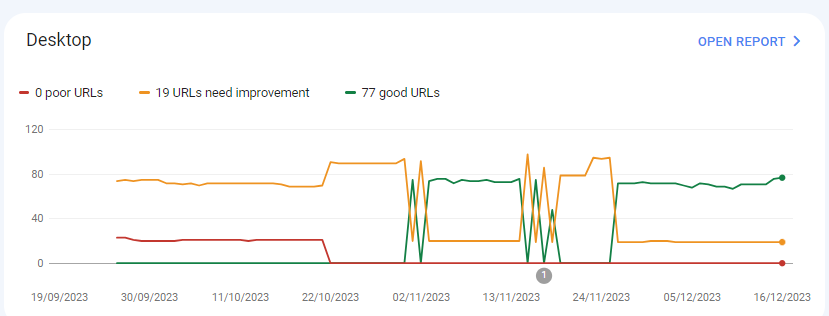

I can't understand Google's algorithm for Core Web Vitals. I have made some technical updates to our website for speed optimization, but what is happening in Search Console is very confusing for my site.

On desktop, our pages are classified as Good URLs, while on mobile the same URLs are displayed as Poor URLs.

Our website is a collection of material for people looking into Canadian immigration (PAIC), and 70% of the content is text only. We are using WebP images for optimization, but the site is still not passing Core Web Vitals. I am looking forward to the experts' suggestions for overcoming this problem.
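A desktop/mobile split like this may be easier to read once you recall how the Core Web Vitals report rates pages: each device type is rated separately from real-user field data, each metric is judged at its 75th percentile, and a URL takes the worst rating among its metrics. Below is a minimal Python sketch of that classification, assuming Google's published thresholds for LCP, INP (which replaced FID as a Core Web Vital in March 2024) and CLS. The function names and sample numbers are hypothetical, and this is an illustration, not Search Console's actual implementation.

```python
from statistics import quantiles

# Assumed thresholds (good_max, poor_above) per metric, based on Google's
# published Core Web Vitals guidance. Values <= good_max are "Good",
# values > poor_above are "Poor", anything in between "Needs improvement".
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless score
}
ORDER = ["Good", "Needs improvement", "Poor"]


def p75(samples):
    """75th percentile of field samples (needs at least two values)."""
    return quantiles(samples, n=4, method="inclusive")[2]


def rate(metric, value):
    """Rate a single metric value against the assumed thresholds."""
    good_max, poor_above = THRESHOLDS[metric]
    if value <= good_max:
        return "Good"
    if value > poor_above:
        return "Poor"
    return "Needs improvement"


def url_status(samples_by_metric):
    """Rate each metric at p75, then keep the worst rating for the URL."""
    ratings = [rate(m, p75(s)) for m, s in samples_by_metric.items()]
    return max(ratings, key=ORDER.index)


if __name__ == "__main__":
    # Hypothetical samples for one page, split by device type.
    desktop = {"LCP": [1800, 2000, 2100, 2300, 2400],
               "CLS": [0.01, 0.02, 0.02, 0.05, 0.08],
               "INP": [80, 90, 120, 150, 180]}
    mobile = {"LCP": [2600, 3100, 3900, 4300, 5200],
              "CLS": [0.02, 0.05, 0.10, 0.12, 0.30],
              "INP": [150, 180, 210, 260, 300]}
    print("desktop:", url_status(desktop))  # Good
    print("mobile:", url_status(mobile))    # Poor
```

With these made-up samples the same page rates Good on desktop and Poor on mobile purely because its mobile field data is slower, even though nothing on the page itself differs.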


-

@rwat Hi, did you find a solution?

-

Yes, I am experiencing the same on one of my websites, but most of its pages are blog posts with a lot of unoptimized images, so that could be the reason, though I'm not sure.

It is also quite possible that Google may be adding more parameters to its Core Web Vitals scoring.


Related Questions

-

Best redirect destination for 18k highly-linked pages

Technical SEO question regarding redirects; I'd appreciate any insights on the best way to handle this.

Situation: We're decommissioning several major content sections on a website, comprising ~18k webpages. This is a well-established site (10+ years), and many of the pages within these sections have high-quality inbound links from .orgs and .edus.

Challenge: We're trying to determine the best place to redirect these 18k pages. For user experience, we believe the best option is the homepage, which has a statement about the changes to the site and links to the most important remaining sections. It's also the most important page on the site, so the boost from the 301-redirected links doesn't seem like a bad thing. However, someone on our team is concerned that so many newly redirected pages and links pointing at our homepage will trigger a negative SEO flag for it, and instead recommends that they all go to our custom 404 page (which also includes links to the important remaining sections).

What's the right approach here to preserve the remaining SEO value of these soon-to-be-redirected pages without triggering Google penalties?

Technical SEO | davidvogel

-
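At this scale the redirects are usually generated rather than written by hand. A minimal Python sketch, assuming hypothetical section prefixes and destinations, that maps each retired path either to a related surviving section or to the homepage as a fallback, and counts how many URLs end up at each destination. It only illustrates the mechanics and does not settle the homepage-versus-404 question; `build_redirect_map` and `destination_counts` are illustrative names.

```python
from collections import Counter


def build_redirect_map(old_paths, prefix_targets, default_target="/"):
    """Map each retired path to a 301 destination.

    prefix_targets is a list of (prefix, destination) pairs checked in order;
    the first matching prefix wins, otherwise default_target (the homepage).
    """
    redirects = {}
    for path in old_paths:
        target = default_target
        for prefix, destination in prefix_targets:
            if path.startswith(prefix):
                target = destination
                break
        redirects[path] = target
    return redirects


def destination_counts(redirects):
    """How many retired URLs would land on each destination."""
    return Counter(redirects.values())


if __name__ == "__main__":
    # Hypothetical retired URLs and section mappings.
    old = ["/guides/a", "/guides/b", "/archive/2010/x", "/misc/y"]
    rules = [("/guides/", "/resources/"), ("/archive/", "/news/")]
    redirects = build_redirect_map(old, rules)
    for src, dst in sorted(redirects.items()):
        print(f"301 {src} -> {dst}")
    print(destination_counts(redirects))
```

The resulting dictionary can then be exported in whatever format the site's server or CMS accepts for bulk 301 rules.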

IP Address Indexed on Google along with Domain (Unsolved)

My website is showing up (indexed) in Google search results twice: once under its IP address and once under its domain name.

I'm using AWS Lightsail.

I don't know how to fix this, and I'm afraid this duplicate could harm my website.

Other SEO Tools | mupetra

-
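A commonly used fix is to 301-redirect any request that arrives on the bare IP address to the domain, so only one version remains crawlable. Below is a minimal Python sketch of just that decision, assuming the hypothetical canonical host `www.example.com`; on AWS Lightsail this logic would normally live in the web server or application configuration, and nothing here is Lightsail-specific.

```python
import ipaddress

CANONICAL_HOST = "www.example.com"  # assumption: replace with the real domain


def host_without_port(host_header):
    """Strip an optional :port from a Host header, including "[v6]:port"."""
    if host_header.startswith("["):
        return host_header[1:host_header.index("]")]
    if host_header.count(":") == 1:  # "name:port" or "v4:port"
        return host_header.rsplit(":", 1)[0]
    return host_header  # no port, or a bare IPv6 address


def is_ip(host):
    """True if host is a literal IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def canonical_redirect(host_header, path, scheme="https"):
    """Return the 301 target URL if the request came in via an IP, else None."""
    if is_ip(host_without_port(host_header)):
        return f"{scheme}://{CANONICAL_HOST}{path}"
    return None
```

Requests for the domain pass through unchanged (`None`), while any IP-based request is sent to the same path on the canonical host.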

Google Not Indexing Pages (WordPress)

Hello, recently I started noticing that Google is not indexing our new pages or new blog posts. We are simply getting a "Discovered - Currently Not Indexed" message on all new pages. When I click "Request Indexing" it takes a few days, but eventually the page does get indexed and appears on Google. This is very strange, as our website has been around since the late '90s and the new content is neither duplicate nor "low quality". We started noticing this around February. We also do not have many pages (maybe 500 maximum). I have looked at all the obvious answers (allowing indexing, etc.), but just can't pinpoint a reason. Has anyone had this happen recently? It is getting very annoying having to manually request indexing for every page, and it makes me think there may be some underlying issue with the website that should be fixed.

Technical SEO | Hasanovic

-

Google News and Discover down by a lot

Hi,

Could you help me understand why my website's Google News and Discover performance dropped suddenly and drastically in November? The numbers seem to be picking up a little again, but nowhere close to what we used to see before then.

Technical SEO | SolenneGINX

-

Sudden jump in the number of 302 redirects on my Squarespace Site

My Squarespace site www.thephysiocompany.com has seen a sudden jump in 302 redirects in the past 30 days: they've gone from 0 to 302 (ironically). They are not detectable using generic redirect-testing sites, and Squarespace has no explanation. Any help would be appreciated.

Technical SEO | Jcoley

-

Google Impressions Drop Due to Expired SSL

Recently I noticed a huge drop in our client's Google impressions in GWMT (Google Webmaster Tools), from 900 impressions to 70 overnight on October 30, 2012, and it has remained this way for the entire month of November 2012. The SSL certificate had expired in mid-October because the renewal notification went to the spam folder and was missed. Is it possible that the SSL expiry is related to this massive drop in daily impressions, which in turn has also affected traffic? I also can't see any evidence of duplicate pages (i.e. https and http) being indexed, but to be honest I'm not the one doing the SEO, so I haven't been tracking this. Thanks for your help! Chris

Technical SEO | MeMediaSEO

-
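Whatever its effect on impressions, a renewal notice lost in a spam folder is easy to back up with a scheduled check. A minimal Python sketch using the standard `ssl` module, assuming hypothetical helper names; `fetch_not_after` needs network access, while `days_until_expiry` is a pure calculation on the certificate's `notAfter` string.

```python
import socket
import ssl
import time


def fetch_not_after(hostname, port=443, timeout=10):
    """Return the server certificate's notAfter string, e.g. 'Jan 15 12:00:00 2030 GMT'.

    With a default context, an already-expired certificate fails verification
    and raises ssl.SSLCertVerificationError, which is itself a useful alert.
    """
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after, now=None):
    """Days left before notAfter; negative once the certificate has expired."""
    expires_at = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires_at - now) / 86400
```

Run on a schedule as `days_until_expiry(fetch_not_after("www.example.com"))` (hypothetical host), this could alert someone well before the result reaches zero.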

Sudden ranking drop, no manual action

Sort of a strange situation I'm having, and I wanted to see if I could get some thoughts. Here's what happened: Monday morning, I realized that my website, which had been showing up at the bottom of page 2 for a specific query, had been demoted to the bottom of page 6 (roughly a 40-spot demotion). No other keyword searches were affected.

I immediately figured that this was some sort of keyword-specific penalty. I had done a bit of link building over the weekend (two or three directory-type sites and a bio link from a site I contribute to). I also changed some anchor text on another site to match my homepage's title tag (which just so happened to be the exact-match phrase I had dropped for), and I assumed this was what got me. I had been slowly climbing the rankings and just got a bit impatient/overzealous. I changed the anchor text back to what it originally was and submitted a reconsideration request on Tuesday.

This morning, I got the automated response in Webmaster Tools that no manual action had been taken. So my question is: could this drop have been automated (algorithmic)? If so, it's going to be mighty hard to pinpoint what I did wrong, since there's no way to know when I did whatever caused the drop. Any ideas/thoughts/suggestions for regaining my modest original placement?

Technical SEO | sandlappercreative